A few weeks ago I wrote a post for Slate arguing that it is time to consider developing—and maybe even using—democracy robots on Twitter. Preprogrammed messages released on a strategic schedule could have an impact on public opinion in sensitive moments for an authoritarian regime. The EFF’s eloquent Jillian York retorted “let’s not”.

In short, I argued that authoritarian governments are already using social media armies and bots to manipulate opinion among their own publics, their diasporas overseas, and even international audiences. Since these governments are investing in such technologies, D-bots could be an important part of a systematic response from the democracies that want to promote democracy.

Most Twitter bots generate inane commentary and try to sell stuff, but some are given political tasks. For example, pro-Chinese bots have clogged Twitter conversations about the conflict in Tibet. In Mexico’s recent presidential election, the political parties played with campaign bots on Twitter. And even an aspiring parliamentarian in Britain turned to bots to appear popular on social media during his campaign. Furthermore, the Chinese, Iranian, Russian, and Venezuelan governments employ their own social media experts and pay small amounts of money to large numbers of people (“50 cent armies”) to generate pro-government messages, however inefficiently.

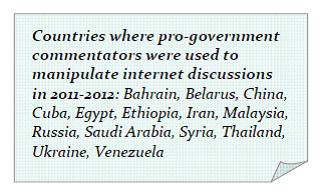

Freedom House’s recent Freedom on the Net report actually identifies a longer list of countries where paid commentators and account hijacking allow regimes to spread misinformation.

Of course, Ms. York is right to question how D-bots would get around the mechanisms that Twitter has for cutting down on the traffic that clogs up its networks.

> How, exactly, would one (or a dozen) Twitter account(s) that tweet links to stories about democracy be effective in combating an army of Twitter accounts targeting individuals with propaganda? It would merely leave the conversation to the bots, all of which would quickly be relegated to spam by Twitter anyway: The social media platform’s algorithms are set up to detect repeated messages, while those not automatically detected can easily be reported by users. The alternative—targeting the bots to tweet at anti-American or anti-democratic accounts—is not likely to be any more effective.

And she makes the smart argument that the better strategy would be for average citizens who are proud of their democracies to take to social media. I wonder whether a volunteer social media troop, one that had substantive interactions about democratic values with people living in authoritarian regimes, would have as much impact as the paid armies of basiji militias, Russian youth nationalists, or Chinese microbloggers.

The impact of D-bots or authoritarian A-bots would only ever be on the small population of elite technology users. This is an important subgroup, however, especially in politically sensitive moments like corruption scandals or rigged elections. The magic of social media is in interactivity, so simply blasting out links to news content is only the first step in disseminating ideas about how political life can be organized. And the research suggests that the most recalcitrant supporters of regimes should not be targeted by media campaigns precisely because they are set in their ways. Reaching out to mommy bloggers, poets, and sports enthusiasts may be the best way to draw larger numbers of people into political conversations they might not otherwise have.

Whether or not the State Department should be funding experiments in building D-bots may be a moot debate. One of the interesting trends we’ve seen is the surprising way in which average citizens in the West have been dedicating their own computing resources to support social movements in authoritarian countries. There is a growing number of tools that let average users build their own Twitter bots. So in the next major political crisis in Russia, China, or Iran, will people be releasing their own bots?

The obvious question is credibility. I would assume that in most authoritarian countries the bots aren’t so much about manipulating opinion as about demarcating the party line and squelching discussion. Maybe there’s a huge population of truly undecided people out there who think “maybe the government’s sock puppets have a point”, but mostly what they’re going to think is “OK, that’s the government position, so I’d better not say anything opposing it in public.” And opposing bots will do nothing to change that situation. What D-bots will do is bring some of the credibility loss over to the other side: think how many times you’ve seen yet another crucially important progressive message in your inbox and trashed it without reading.