Because voting machines contain computers that can be hacked to make them cheat, “Elections should be conducted with human-readable paper ballots. These may be marked by hand or by machine (using a ballot-marking device); they may be counted by hand or by machine (using an optical scanner). Recounts and audits should be conducted by human inspection of the human-readable portion of the paper ballots.”

Ballot-marking devices (BMD) contain computers too, and those can also be hacked to make them cheat. But the principle of voter verifiability is that when the BMD prints out a summary card of the voter’s choices, which the voter can hold in hand before depositing it for scanning and counting, then the voter has verified the printout that can later be recounted by human inspection.

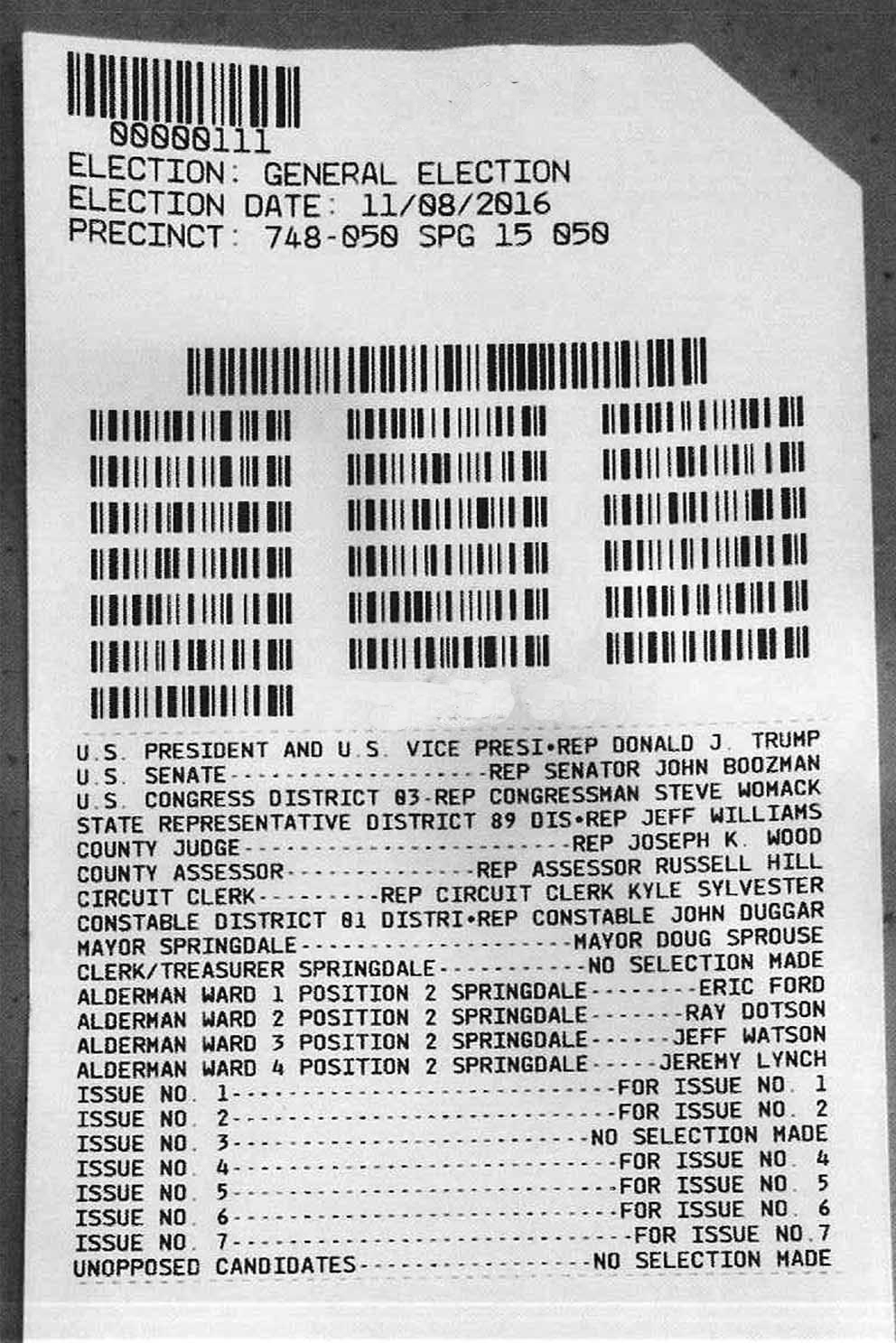

ExpressVote ballot card, with bar codes for optical scanner and with human-readable summary of choices for use in voter verification and in recount or audit.

But really? As a practical matter, do voters verify their BMD-printed ballot cards, and are they even capable of it? Until now, there hasn’t been much scientific research on that question.

A new study by Richard DeMillo, Robert Kadel, and Marilyn Marks now answers that question with hard evidence:

- In a real polling place, half the voters don’t inspect their ballot cards, and the other half inspect for an average of 3.9 seconds (for a ballot with 18 contests!).

- When asked, immediately after depositing their ballot, to review an unvoted copy of the ballot they just voted on, most won’t detect that the wrong contests are presented, or that some are missing.

This can be seen as a refutation of Ballot-Marking Devices as a concept. Since we cannot trust a BMD to accurately mark the ballot (because it may be hacked), and we cannot trust the voter to accurately review the paper ballot (or even to review it at all), what we can most trust is an optical-scan ballot marked by the voter, with a pen. Although optical-scan ballots aren’t perfect either, that’s the best option we have to ensure that the voter’s choices are accurately recorded on the paper that will be used in a recount or random audit.

Here’s how DeMillo et al. measured voter verification.

- In Gatlinburg, Tennessee they observed a polling place during a primary election in May 2018. There were several BMDs (ExpressVote), where voters produced their ballot card using a touchscreen, and a single optical scanner where voters then deposited their ballot cards. The observers were not close enough to see the voters’ choices, but close enough to see the voter’s behavior. 87 voters were observed, of whom 47% did not look at the ballot card at all before depositing it, and 53% reviewed the card for an average of 3.9 seconds. There were 18 contests on the ballot.

- Near Chattanooga, Tennessee during an August 2018 primary election, they conducted a kind of “exit poll”, at the required distance of 150 feet from the polling location. The voter was asked to review an unvoted optical-scan sample ballot, and was asked, “Is this the ballot you just voted on?”

- When the voter was given the correct ballot, 86% recognized it as the right ballot, and 14% thought it was different from what they voted on.

- When the voter was given a subtly incorrect ballot (completely different set of candidates either for House of Representatives, for County Commission, or for School Board), 56% wrongly said that this was the ballot they voted on, and 44% said there was something wrong.
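To put the Gatlinburg observation in per-contest terms, here is a minimal arithmetic sketch using only the figures quoted above. The head counts are inferred from the rounded percentages and are not figures reported in the post; the variable names are illustrative.

```python
# Figures quoted in the post for the Gatlinburg polling place.
observed_voters = 87
share_not_looking = 0.47
share_reviewing = 0.53
mean_review_seconds = 3.9
contests_on_ballot = 18

# Approximate head counts implied by the rounded percentages
# (an inference for illustration, not numbers reported in the post).
approx_not_looking = round(observed_voters * share_not_looking)
approx_reviewing = round(observed_voters * share_reviewing)

# Average review time per contest for the voters who did look.
seconds_per_contest = mean_review_seconds / contests_on_ballot

print(approx_not_looking, approx_reviewing)
print(f"{seconds_per_contest:.2f} seconds per contest")
```

Under these assumptions, roughly 41 voters did not look at the card, roughly 46 did, and those who looked spent on average about a fifth of a second per contest.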

This strongly suggests that it is possible for a hacked BMD to cheat in just one contest (for Congress, perhaps, when there is a presidential race on the top of the ticket; or state legislator) and most voters won’t notice. Those who do notice can ask to try again on the touchscreen, and a cleverly hacked BMD will make sure not to cheat the same voter again.
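As a rough back-of-envelope illustration of "most voters won't notice", the two reported figures can be combined. This is only a sketch under a strong assumption: that a voter who looks at the card would notice an altered contest at the same rate as exit-poll respondents flagged an altered sample ballot. The two numbers come from different polling places and measure different things, so the result is illustrative and not a finding of the study.

```python
# Gatlinburg: share of voters who looked at their ballot card at all.
share_reviewing = 0.53
# Chattanooga: share of respondents who flagged a subtly incorrect sample ballot.
share_flagging_altered = 0.44

# Hypothetical assumption: reviewers notice an altered contest at the exit-poll rate,
# and voters who do not look at the card notice nothing.
rough_share_noticing = share_reviewing * share_flagging_altered

print(f"{rough_share_noticing:.1%} of voters might notice")
```

On this assumption, fewer than one voter in four would catch an altered contest.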

In summary, “Can voters effectively verify their machine-marked ballots?” No. “Do voters try to verify their machine-marked ballots?” No.

As DeMillo et al. write, “Given the extent to which test subjects are known . . . to agree with an authoritative-sounding description of events that never happened, the use of recall in [BMD-marked ballots] deserves further evaluation. . . . The [BMD-marked ballot] is not a reliable document for post-election audits.”

And therefore, voters should mark optical-scan bubbles on their paper ballot, with a pen.

References

What Voters are Asked to Verify Affects Ballot Verification: A Quantitative Analysis of Voters’ Memories of Their Ballots, by Richard DeMillo, Robert Kadel, and Marilyn Marks, SSRN abstract 3292208, November 2018.

There is also related work on whether voters notice changes on the “review screens” of touchscreen DRE voting machines. That’s not quite the same thing, because a hacked DRE can also cheat on its review screen. Still, the related work also finds that voters are not very good at noticing errors:

The Usability of Electronic Voting Machines and How Votes Can Be Changed Without Detection, by Sarah P. Everett, PhD Thesis, Rice University, 2007.

Now Do Voters Notice Review Screen Anomalies? A Look at Voting System Usability, by Bryan A. Campbell and Michael D. Byrne, in 2009 USENIX/ACCURATE Electronic Voting Technology Workshop/Workshop on Trustworthy Elections (EVT/WOTE) (Montreal, Canada, 2009).

Broken Ballots: Will Your Vote Count?, (section 5.6) by Douglas W. Jones and Barbara Simons, CSLI Publications, 2012.

The Vote-o-graph: Experiments with Touch Screen Voting, by Douglas W. Jones, 2011.

If only it were so simple. The cited study is at best suggestive, but hardly conclusive. I have to wonder whether this was peer-reviewed at all. It’s poorly written and has many errors in the references; the scholarship is definitely poor. More importantly, though, the research design provides little basis for the conclusions presented. The question is definitely not “answered” and this study does not provide “hard evidence.”

First, as Professor Gilbert noted, the ES&S ballot cards are horribly designed. If I were trying to design something to make it intentionally difficult to read, the first place I’d start would be with monospaced all caps, which makes perceptual discrimination extremely difficult. I don’t blame voters for not paying much attention to this, I blame the poor design. If you want people to do something, make it easy for them to do it.

Second, the recognition comparison was the kind of thing I’d use in an undergraduate psych course as an example of how NOT to design or execute a memory experiment. Here’s a partial list of problems: (1) Non-random sample, (2) No evidence that those sampled took the task seriously–they claimed that time was important in Study 1 but didn’t measure time in Study 2? Seriously? How many of the volunteers who got it wrong looked at the test ballot for less than a few seconds? WHY WAS THIS NOT RECORDED AND REPORTED? (3) No evidence that voters voted on, and therefore paid attention to, those specific races, and not surprisingly so: one race was non-contested, which voters are strongly incentivized to ignore, and the other race was literally the bottom of the ballot. (4) Memory is fragile and HIGHLY subject to decay. Measuring this accurately has to be done right after the vote, not later on, outside the polling place, with no information about exactly how long the lag was between cast and memory test. Unless they actually followed voters out of the polling place, they don’t actually know what the lag was.

Overall this study was neither well-designed nor well-executed. If I were reviewing this for publication in a journal, I would almost certainly reject the paper.

Furthermore, this paper does not AT ALL answer the question whether voters CAN verify appropriately. It (at best) suggests that they _don’t_, but says nothing whatsoever about whether they can or can’t.

Don’t get me wrong, the ability of voters to verify the output of a BMD is a really serious concern. I’d definitely sleep better if I knew people would really verify accurately if they’re given a well-designed ballot. But I don’t know, and nobody else does, either. It would be fantastic for someone to do some good research that helps understand this better.

Regardless of the outcome of that research, hand-marked paper has other problems, which need to be carefully weighed against the benefits of good BMDs (of which the ES&S system here is perhaps not one).

The first serious problem with hand-marked paper is accessibility. Hand-marked paper is pretty much a disaster for people with visual impairments, motor impairments, and especially for those with both. Having most people vote on paper and a subset vote on something else violates equal protection. See also https://civicdesign.org/why-not-just-use-pens-to-mark-a-ballot/

The second problem is voter error. Really well-designed GUIs are superior to paper in their ability to accurately capture voter intent. If your security infrastructure compromises the quality of the data, it’s not really secure. Admittedly, most commercial DREs aren’t well-designed enough to beat paper, but we know it can be done.

The third problem is administration. Hand-marked paper is extremely difficult to deploy in jurisdictions that require multiple languages and want to support vote centers, especially if that jurisdiction is large. The only remotely viable solution here is print-on-demand, which has high power requirements and thus ironically renders polling places vulnerable to DoS attacks by cutting power. It’s also complex to administer, and furthermore the ballot-on-demand (BOD) system itself is also a potential attack target.

Hand-marked paper is NOT a panacea. Neither are BMDs! The idea that we somehow know the answer to which one of these is actually best prior to doing the science is premature.

But do consider this: we KNOW that elections in the U.S. (including a Presidential election!) have been decided on the basis of poor ballot usability. We cannot say the same for poor computer security (at least, I don’t think so—please correct me if I’m wrong). The idea that security concerns are somehow more important than usability concerns is a mistake. (It’s also a mistake to think the other way, but that seems pretty rare to me.) The suggestion that it’s not OK for a hacker to _potentially_ alter an election, but it’s totally OK to deploy things that we _know_ compromise usability, is not the path that best serves the goal of ensuring election integrity. And isn’t THAT the real goal?

How would the “cleverly hacked BMD… make sure not to cheat the same voter again”?

Equally cleverly hidden facial recognition hardware and software, able to determine in a fraction of a second if someone has come to any of the location’s BMDs earlier in the day, or biometric scanners that read retinas and fingerprints from across a room to give itself time to determine whether or not a voter had cast a ballot earlier.

One simple way is, “don’t cheat twice within 10 minutes.”

Also, in many states, the voter inserts a bar-code or smart-card “authorization to vote” into the BMD, so the BMD will know it’s the same voter.

This is excellent to quantify. Related question: has there been any research on the speed of voting or throughput, and cost of hand marked ballots with optical scans versus touchscreen voting machines? I *think* that the paper ballots would go quicker and be much cheaper, but some science behind that would be great to know.

Yes. Hand-marked paper is definitely not faster. There’s some suggestion in our data that DREs are faster, but it’s not conclusive. As far as we can tell, reading speed is a bigger determinant of the time it takes to fill out a ballot than is what technology is used.

As for cheaper, if the DRE used is paperless (which is terrible and nobody should do this, but many do), it’s cheaper. Handling paper at all increases administration costs. Now, whether hand-marked opscan is cheaper than BMDs is not clear.

The “voting machine results tapes” shown on this post (and also on the post I was linked from) show no evidence of election workers (at least three signatures) signing them before the tapes were removed from their respective machines. Sadly, if these tapes were brought into a court of law as evidence for a case, a good judge would have to treat them as non-evidence or hearsay, and possibly dismiss the case.

The printout shown on this post is not a “results tape”, it is a “ballot.” Therefore no signatures are expected.

This article introduces a much-needed study on Ballot-Marking Devices (BMDs); however, it doesn’t tell the entire story. Here are some additional points to consider:

The ES&S ExpressVote ballot summary is poorly designed. It’s very hard to read. This doesn’t help voters verify their marks. I haven’t studied this, but based on my experience, I am very confident that this design will result in errors for people reviewing them. In our work with Prime III, we double-space the ballot summary and we only left-justify the summary. Here are some samples from a Prime III ballot:

1. President and Vice-President ==> Joseph Barchi and Joseph Hallaren (B)

2. US Senate ==> John Hewetson (O)

3. US Representative ==> No_Selection

This design will result in easy to read ballot summaries.

I am not surprised that voters cannot remember the ballot. Cognitively, that’s a burden. I would also say that for hand-marked ballots, most voters don’t care about every ballot contest/item, therefore, they aren’t as tuned in on those items either way. Essentially, it doesn’t matter if it’s hand-marked or machine-marked followed by human-verification, if they don’t care, they will make mistakes and not notice.

The 2008 Minnesota Senate race used hand-marked paper ballots and there was chaos. The chaos was a result of the voter’s intent not being captured accurately. Several ballots were thrown out. Voters made marks on the ballot that the scanning machines could not read, but humans would argue as legitimate marks. We have seen this in multiple hand-marked ballot elections where the human marks are not correctly interpreted by the optical scanners and/or the human auditors during recounts. Therefore, voters assume their ballot is correct, but have no idea. With a properly designed ballot summary produced by a BMD, this cannot, and will not, happen.

I think it’s possible that voters can verify ballot summaries that are done well. Additional research studies are needed to validate this claim, but when you compare the options of hand-marked paper ballot being accurately scanned by the optical scanner and accurately interpreted by humans vs. the accuracy of the ballot summary produced by a BMD, it’s clear to me that the BMD has an advantage. BMDs can eliminate these discrepancies.

Another thought: the argument assumes that the BMD has corrupt code or errors in the code that change the voters’ selections. This begins with the premise that the software is compromised and cannot be identified as compromised. In our work, we have proposed that the BMD software be transparent and stateless, meaning it can run from a read-only device. If there are errors in the software, it cannot change itself, so the errors will be discovered. If it’s transparent and open, then people can verify the code as well. With appropriate testing, these errors can be identified and corrected. I plan to conduct some additional studies in this area, stay tuned…

I agree with you on many points regarding BMD ballot readability, but I disagree with your characterization of the 2008 Minnesota Senate race. I wrote about that race here:

https://freedom-to-tinker.com/2009/01/21/optical-scan-voting-extremely-accurate-minnesota/

In a contest with 2.4 million ballots cast, only 234 ballots were considered ambiguous. That’s not “chaos.”
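For scale, the ambiguity rate implied by those two numbers can be worked out directly. This is a minimal sketch that treats "2.4 million" as an exact count, which it is not, so the result is approximate.

```python
# Figures quoted in the comment above; 2.4 million is a rounded total.
ballots_cast = 2_400_000
ambiguous_ballots = 234

# Share of ballots considered ambiguous, and its reciprocal.
ambiguous_rate = ambiguous_ballots / ballots_cast
ballots_per_ambiguous = ballots_cast / ambiguous_ballots

print(f"{ambiguous_rate:.4%} of ballots were ambiguous")
print(f"about 1 in {ballots_per_ambiguous:,.0f} ballots")
```

That works out to roughly one ambiguous ballot per ten thousand cast.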

Thank you, Dr. Appel, for this clear, substantiated explanation of why a Ballot-Marking Device should NOT be used by ALL voters to mark their ballots, as it does not ensure that the voters’ intended votes will actually choose the winners of elections. Thank you for continuing to work at explaining why the only way to ensure that the winners of elections are the choice of the voters is for voters, AS THE FIRST STEP in the voting process, to hand-mark their votes on paper ballots. “Paper trails” are not enough.