This post and the paper are jointly authored by Shaanan Cohney, Ross Teixeira, Anne Kohlbrenner, Arvind Narayanan, Mihir Kshirsagar, Yan Shvartzshnaider, and Madelyn Sanfilippo. It emerged from a case study at CITP’s tech policy clinic.

As universities rely on remote educational technology to facilitate the rapid shift to online learning, they expose themselves to new security risks and privacy violations. Our latest research paper, “Virtual Classrooms and Real Harms,” advances recommendations for universities and policymakers to protect the interests of students and educators.

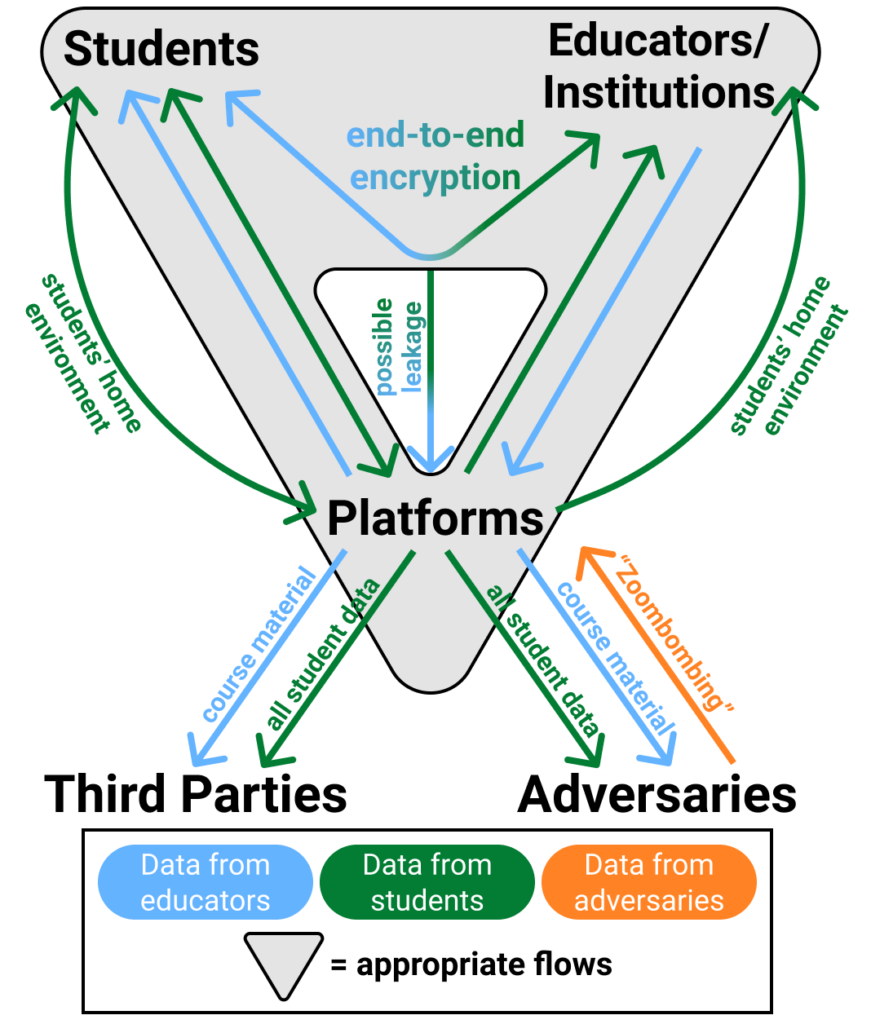

The paper develops a threat model that describes the actors, incentives, and risks in online education. The model is informed by our survey of 105 educators and 10 administrators, who described their expectations and concerns. We use the model to conduct a privacy and security analysis of 23 popular platforms, combining sociological analyses of their privacy policies and of 129 state laws with a technical assessment of the platform software.

In the physical classroom, educational norms and rules prevent surreptitious recording of the classroom and automated extraction of data. But when classroom interactions shift to a digital platform, not only does data collection become much easier, but the social cues that discourage privacy harms also weaken, and participants are exposed to new security risks. Popular platforms, like Canvas, Piazza, and Slack, take advantage of this changed environment to act in ways that would be objectionable in the physical classroom, such as selling data about interactions to advertisers or other third parties. As a result, remote learning software severely tests the established informational norms of the educational context.

We analyze the privacy policies of 23 major platforms to find where those policies conflict with educational norms. For example, 41% of the policies permitted a platform to share data with advertisers, which conflicts with at least 21 state laws, while 23% allowed a platform to share location data. However, privacy policies are not the only documents that shape platform practices. When universities negotiate institutional licenses with a platform, they attach Data Protection Addenda (DPAs) that supplement or even supplant the default privacy policy. We reviewed 50 DPAs from 45 universities and found that the addenda led platforms to shift their data practices significantly, including through stricter limits on data retention and use.

We also discuss the limitations of current federal and state regulation in addressing the risks we identified. In particular, current laws lack specific guidance for platforms and educational institutions on protecting privacy and security, and they impose limited penalties for noncompliance. More broadly, the existing legal framework is geared toward regulating specific information types and a small subset of actors, rather than specifying transmission principles for appropriate use that would be more durable as the technology evolves.

What can be done to better protect students and educators? We offer the following five recommendations:

- Educators should understand that there are significant differences between free (or individually licensed) versions of software and institutional versions. Universities should inform educators about those differences and encourage them to use institutionally supported software.

- Universities should use their ability to negotiate DPAs and set institutional policies to require platforms to modify default practices that are in tension with institutional values.

- Crucially, universities should not spend all their resources on a complex vetting process before licensing software. That path leads to significant usability problems for end users without addressing the security and privacy concerns. Instead, universities should recognize that significant issues tend to surface only after educators and students have used the platforms, and they should create processes to collect those issues and have software developers fix them rapidly.

- Universities should establish clear principles for how software should respect the norms of the educational context, and they should require developers to offer products that institutions can customize for that setting.

- Federal and state regulations can be improved by making platforms more accountable for compliance with legal requirements and by giving institutions a mandate to require baseline security practices, much as the Federal Trade Commission’s Safeguards Rule requires financial institutions to protect consumer information.

The shift to virtual learning already requires many sacrifices from educators and students. As we integrate these new learning platforms into our educational systems, we should ensure that they reflect established educational norms and do not require users to sacrifice usability, security, or privacy.

We thank the members of Remote Academia and the university administrators who participated in the study. Remote Academia is a global Slack-based community that gives faculty and other education professionals a space to share resources and techniques for remote learning. It was created by Anne, Ross, and Shaanan.