At a recent Stanford-MIT-Princeton workshop, experts highlight the need for legal protections, standardized evaluation practices, and better terminology to support third-party AI evaluations.

On October 28, researchers at Stanford, MIT, Princeton’s Center for Information Technology Policy, and Humane Intelligence convened leaders from academia, industry, civil society and government for a virtual workshop to articulate a vision for third party AI evaluations. The workshop spanned three sessions exploring evaluations in practice, evaluations by design, and evaluation law and policy, beginning with a keynote from Rumman Chowdhury, CEO at Humane Intelligence.

This post covers the second half of the workshop. Read the previous post for the first half.

You can watch the full workshop here.

Session 3. The Design of Evaluations Can Draw From Audits and Software Security

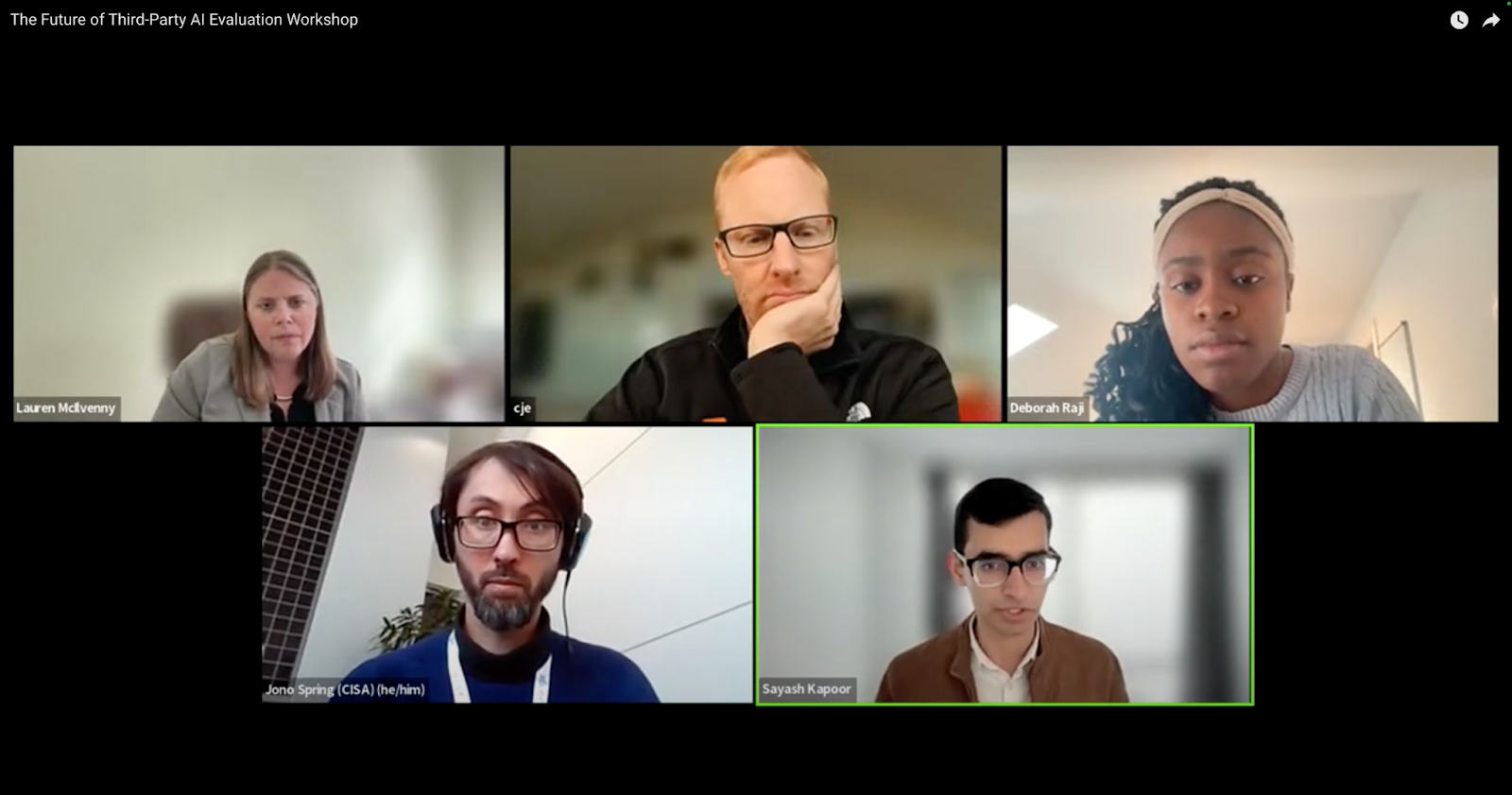

The second panel provided insights on the design of third party evaluations, featuring presentations by Deb Raji, Fellow at the Mozilla Foundation and PhD Candidate at Berkeley; Casey Ellis, Chairman and Founder of Bugcrowd; Jonathan Spring, Deputy Chief AI Officer at CISA; and Lauren McIlvenny, Technical Director and CERT Threat Analysis Director at the AI Security Incident Response Team (AISIRT) at CMU.

- Deb Raji’s opening remarks [1:53:03]

- Casey Ellis’s opening remarks [2:09:54]

- Jonathan Spring’s opening remarks [2:18:54]

- Lauren McIlvenny’s opening remarks [2:28:36]

Raji highlighted a number of obstacles that make AI audits difficult, drawing on her experience as an auditor and researcher. She explained that AI auditors face a variety of barriers, including a lack of protection against retaliation, little standardization of the audit and certification process, and little accountability for the audited party. In her role at Mozilla, she shaped the development of open-source audit tooling, and she argued that more capacity-building and transparency are needed when it comes to audit tools. In terms of the institutional design of audit systems, she pointed to case studies from other domains, such as the FDA, that may be instructive for the AI space. She called for expanding the audit scope to include entire sociotechnical systems, using a wider variety of methodologies and tooling, and maintaining clear audit targets and objectives, while also recognizing the limits of audits and calling for a prohibition on certain uses of AI systems in some cases.

As the founder of Bugcrowd, a company that offers bug bounty and vulnerability disclosure programs, Ellis contended that discovering AI vulnerabilities is very similar to discovering software vulnerabilities. He mentioned the need to build a consensus around common language use to shape law and policy effectively. For example, software security researchers tend to use the term “security,” whereas AI researchers tend to use the term “safety.” Further, in his view, vulnerabilities will be an issue in any system — they are unavoidable, so the best we can do is to prepare for them. He argued that AI can be used as a tool to increase attacker productivity, as a target to exploit weaknesses in a system, and as a threat via unintended security consequences because of the use and integration of AI.

Drawing on his work as Deputy Chief AI Officer at the U.S. Cybersecurity and Infrastructure Security Agency (CISA), Spring laid out seven concrete goals for security by design: (1) increase use of multi-factor authentication, (2) reduce default passwords, (3) reduce entire classes of vulnerability, (4) increase the installation of security patches by customers, (5) publish a vulnerability disclosure policy, (6) demonstrate transparency in vulnerability reporting, and (7) increase the ability for customers to gather evidence of cybersecurity intrusions affecting their products. He made the case that how the community addresses AI vulnerabilities should align with, and be integrated with, how cybersecurity vulnerabilities are managed, including as part of CISA’s related work.

In line with Spring, McIlvenny stressed the similarities between software security and AI safety. She described the longstanding practice of coordinated vulnerability disclosure (CVD) for software vulnerabilities, noting that AI vulnerabilities are more complex because they can reside anywhere across an AI system – not only in the AI model, but also in the other software surrounding it – and that the culture of the AI community does not share the software engineering history of cybersecurity practices. She stressed that AI is software, and software engineering matters: “If secure development practices were followed, about half of those vulnerabilities that I’ve seen in AI systems would be gone.” She also highlighted the need for coordination – between those who discover a problem and those who can fix it – and urged participants to focus on fixing problems rather than arguing about definitions.

Session 4. Shaping Law and Policy to Protect Evaluators

The third panel focused on the law and policy of third party AI evaluations, featuring Harley Geiger, Coordinator at the Hacking Policy Council; Ilona Cohen, Chief Legal and Policy Officer at HackerOne; and Amit Elazari, Co-Founder and CEO at OpenPolicy.

- Harley Geiger’s opening remarks [3:14:05]

- Ilona Cohen’s opening remarks [3:27:27]

- Amit Elazari’s opening remarks [3:37:39]

Taking a big-picture view, Geiger said that law and policy will shape the future of AI evaluation, and that existing laws on software security need to be updated to accommodate AI security research. He noted gaps in the current legal landscape, explaining that while existing law covers security testing for software, laws need to explicitly cover non-security AI risks – such as bias, discrimination, toxicity, and harmful outputs – to prevent researchers from facing undue liability. He stressed the importance of using consistent terminology, clarifying legal protections for third party evaluation, and developing a process for flaw disclosure, and mentioned that real-life examples of how research has been chilled would be very helpful in advocating for legal protections.

Cohen agreed on the importance of shared terminology, noting that she uses the term “AI security” to describe a focus on protecting AI systems from the risks of the outside world, whereas she uses “AI safety” to describe a focus on protecting the outside world from AI risks. She shared success stories of third party AI evaluations, noting that HackerOne’s programs have helped minimize legal risks for participants and made foundation models safer. Cohen argued that red teaming is more effective the more closely it mimics real-world usage. She also provided a brief overview of the fractured landscape of AI regulation.

Rounding out the speakers’ remarks, Elazari stressed the unique moment we are in, given that we are still shaping the definitions of concepts such as AI and red teaming. She highlighted the power of policy work, noting that for security research, she sees success in expanded safe harbor agreements alongside a movement for bug bounties and vulnerability disclosures. For the AI space, she argued that “we have this gap between an amazing moment where there is urgency around embracing red teaming, but not enough focus on creating the protections [for third party evaluators] that we need.” She closed with a powerful message urging participants to help shape law and policy for the better: “This is a call to action to you […] There is a lot of power in the community coming together.”


Ruth E. Appel is a researcher at Stanford University and holds a Master’s in CS and PhD in Political Communication from Stanford, and an MPP from Sciences Po Paris.
