With the recently introduced iOS 8, Apple has switched to encrypting a much larger amount of user data by default. Matt Green has provided an excellent initial look at both a technical and a big-picture level, and Apple has recently released a slightly more detailed specification document and an admirable promise never to include backdoors. This move, and Google’s prompt promise to follow suit with Android, are big news. They’ve even garnered criticism from the director of the FBI and rekindled debate about mandatory key escrow, which, as has been pointed out, is a debate the tech community last seriously discussed while listening to Vanilla Ice on a cassette player in the early 90s.

It’s now 2014, and we have ample experience demonstrating that intentional backdoors are unacceptably risky and vulnerability-prone. More encryption without backdoors is a good thing, and Apple should be commended for continuing to have their users’ backs. However, I’d like to sound an important note of caution about the strength of Apple’s encryption against a determined (read: governmental) attacker:

Security is only as good as your device password is.

Encryption makes security into a matter of key management, and since iOS keys are solely derived from a password, iOS encryption makes security all about your password.

This will be a lengthy technical post, but the essential point is that security still relies on passwords, which often aren’t very good. Built-in hardware support can limit how many guesses someone who has taken your phone can make while trying to recover your data, which is a fundamental improvement over purely software encryption. Unfortunately, recent research on passwords suggests that Apple has not set this rate limit strictly enough, likely leaving a substantial proportion of users still at risk.

The goal of encryption is forcing the adversary to guess your password

There are many ways an attacker can try to get your data, the easiest of which may still be going after the backup copy in iCloud which is turned on by default and is out of the user’s control. Assuming you turn that feature off or trust Apple to keep your backed-up data secure, an attacker will need physical access to your phone. If they get your phone while it’s powered on (but locked), much of your data (and the keys used to encrypt it) will be available to the phone. This is a defensible design trade-off: without it, the device might need to demand your password much more often and this could get annoying. It might be nice if users had the option of these keys being wrapped more often, but we’ll leave that to the side for now [1].

There are still a number of tricks for getting your data out without your passcode. Jonathan Zdziarski has done great work discovering these over time, and though Apple has patched many holes with iOS 8, we should assume memory on a powered-on device will continue to be vulnerable [2]. Critically, almost all of your data is tied to a key that is decrypted only when you first turn the phone on (see pp. 13 and 19 here). Another approach is simply to unlock a powered-on phone by fooling the default unlock mechanism, Touch ID (the fingerprint reader), which can evidently be done using latent fingerprints and relatively simple tools. In general, we can conclude that:

encryption can reliably protect you only if your device is seized while turned off

If your phone is seized while powered off, the adversary will try to copy all of the memory from the device (this is called “imaging” the device). Traditionally, this has been straightforward with a number of forensic tools readily available to dump memory. Apple has worked to make this harder, but with encryption it doesn’t matter: your important data can’t be extracted from the raw disk image without their decryption keys, specifically the “Class keys” used to encrypt various types of data. This is the real benefit to encryption: an attacker can’t just image memory, they need to interact with your phone to unwrap the Class keys.

Class keys are themselves encrypted while stored and only decrypted after booting the device by combining your password and your device’s “Unique ID” (UID), a secret key burned into your device forever. This all happens within a cryptographic coprocessor (the “Secure Enclave”) which is designed to make it as difficult as possible to extract the key. Preventing physical extraction of the key is hard against a sophisticated attacker [3], but for the purposes of this post we’ll assume this is properly designed and implemented.
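
To make the idea of combining a password with a device secret concrete, here is a minimal Python sketch of an iterated key derivation entangled with a UID. It is not Apple’s construction: `DEVICE_UID`, `derive_passcode_key`, the use of HMAC-SHA256 and the iteration count are all illustrative assumptions. What it shows is the property the rest of this post relies on: because every round is keyed with the UID, nobody can compute the key for a guessed passcode without holding the UID, so guesses have to be run on the device itself.

```python
import hashlib
import hmac
import secrets

# Hypothetical stand-in for the device's Unique ID (UID). On real hardware this
# key is fused into the chip and never leaves the coprocessor.
DEVICE_UID = secrets.token_bytes(32)


def derive_passcode_key(passcode: str, uid: bytes, iterations: int = 10_000) -> bytes:
    """Illustrative iterated derivation that entangles a passcode with a UID.

    Each round is an HMAC keyed with the UID, so the result cannot be computed
    (or brute-forced) by anyone who does not hold the UID.
    """
    state = hmac.new(uid, passcode.encode("utf-8"), hashlib.sha256).digest()
    for _ in range(iterations):
        # Feed the previous output back in; more rounds mean more work per guess.
        state = hmac.new(uid, state, hashlib.sha256).digest()
    return state


key = derive_passcode_key("correct horse", DEVICE_UID)
# Same passcode, but an attacker's guess at the UID: an unrelated key.
assert key != derive_passcode_key("correct horse", secrets.token_bytes(32))
```

A real design would tune the iteration count so that one derivation takes a fixed amount of time on the target hardware; Apple’s documentation cites at least 80 ms per derivation.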

So, an attacker who seizes your phone will have to trick the Secure Enclave into checking a large number of guesses at your password. There are a few ways to do this, but generally the attacker will want to boot the device with their own firmware or OS. There’s a complicated secure boot sequence designed to make this difficult, but we’ll assume this can be defeated by finding a security hole, stealing Apple’s key to sign alternate code, or coercing Apple into doing so.

Finally, the core security impact of all this hardware-supported encryption is that somebody who’s taken your phone can’t test guessed passwords against your data as fast as their own hardware allows (a fully offline attack). They have to go through your device’s coprocessor, which has your UID stored internally.

It’s worth stopping here to point out that it’s already a major win if you’re forcing an attacker to go through these steps.

Where it can still go wrong: assuming user passwords are a uniform distribution

So, given access to the Secure Enclave coprocessor, how hard will it be for an attacker to guess your password? The coprocessor is deliberately designed to be slow at unwrapping encrypted keys. Apple’s documentation gets a little fuzzy here, providing two different limits. The coprocessor’s key derivation function is designed to take at least 80 ms to compute (it’s an iterated hash), and the Secure Enclave is also supposed to wait 5 seconds between attempts. It’s not clear which limit an attacker would actually face, and Apple’s own later calculation uses the 80 ms figure. Either way, the upshot of encryption is that an attacker who’s stolen your phone can guess your password at a rate of either about 12 guesses per second (12.5 Hz, to be precise) or 1 guess every 5 seconds (0.2 Hz).
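
Converting a per-guess cost into a guessing rate, and from there into a daily or weekly guess budget, is simple arithmetic. This Python sketch uses only the two delays quoted from Apple’s documentation; the helper names are made up for illustration.

```python
SECONDS_PER_DAY = 24 * 60 * 60

# The two per-guess costs described in Apple's documentation, in seconds.
DELAYS_S = {"80 ms key derivation": 0.080, "5 s enforced delay": 5.0}


def guess_rate_hz(delay_s: float) -> float:
    """Guesses per second when every guess costs `delay_s` seconds."""
    return 1.0 / delay_s


def guesses_in(window_s: float, rate_hz: float) -> int:
    """Number of guesses (rounded) that fit into a time window at a fixed rate."""
    return round(window_s * rate_hz)


for label, delay in DELAYS_S.items():
    rate = guess_rate_hz(delay)
    print(f"{label}: {rate:g} Hz, "
          f"{guesses_in(SECONDS_PER_DAY, rate):,} guesses/day, "
          f"{guesses_in(7 * SECONDS_PER_DAY, rate):,} guesses/week")
```

At 12.5 Hz this comes to 1,080,000 guesses per day; at 0.2 Hz it is 17,280.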

Apple claims in their document (see p. 11) that even at the faster 12.5 Hz rate “it would take more than 5½ years to try all combinations of a six-character alphanumeric passcode with lowercase letters and numbers.” We’ve all been taught to pick passwords of 8 characters or longer, and 5 years is a long time, so that sounds pretty good!
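
Apple’s figure is easy to reproduce. The sketch below assumes, as Apple’s calculation implicitly does, that every passcode in the space of 6 characters drawn from 36 symbols is equally likely to be chosen.

```python
ALPHABET_SIZE = 26 + 10          # lowercase letters plus digits
LENGTH = 6
RATE_HZ = 12.5                   # one guess per 80 ms
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60

keyspace = ALPHABET_SIZE ** LENGTH
years_to_exhaust = keyspace / RATE_HZ / SECONDS_PER_YEAR
print(f"{keyspace:,} passcodes, {years_to_exhaust:.2f} years to try them all")
```

This prints 2,176,782,336 passcodes and 5.52 years, matching Apple’s claim. The arithmetic is fine; the equal-likelihood assumption is the weak point.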

Unfortunately, Apple has committed what’s probably the fundamental error in reasoning about passwords: modeling a human-chosen password distribution as a small-ish uniform distribution. Sure, human selection doesn’t actually produce a uniform distribution, but people also often choose passwords longer than 6 characters, so a 6-character uniform distribution seems like a fair approximation.

However, human-chosen distributions are so highly skewed that approximating them with a uniform distribution breaks down completely. My PhD thesis centered on why this approximation fails, and this is a classic example.

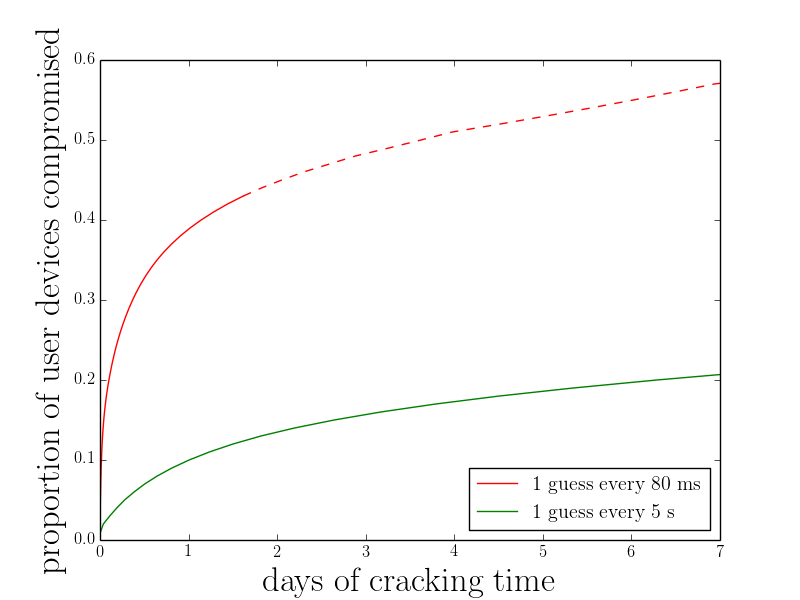

The best way to see this is the simple plot above. I believe the best dataset we have on the distribution of user-chosen passwords is one I collected in cooperation with Yahoo! in 2011 [4], so we’ll use that. Assuming users choose passwords to protect their iOS data the way they choose them to protect their Yahoo! accounts, the figure shows how many days of cracking per device are required (at a guess rate of either 12.5 Hz or 0.2 Hz) to compromise a given proportion of users’ devices. The dashed portion of the line is a statistical projection, the details of which are in my thesis.

The results are vastly different from what we’d expect for a 6-character uniform distribution: at 12.5 Hz, over half of devices would be compromised in a week, and over a third would be compromised in less than 24 hours! At 12.5 Hz an attacker gets over 1 million guesses per day, enough to start guessing a serious proportion of users’ passwords. Even at the slower rate of 0.2 Hz, around 20% of users would fall in a week. Compromising all users is impossible at either guessing rate, because some users choose genuinely strong passwords. But many users will no doubt be vulnerable.
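
Each point on a curve like the one in the plot comes from a simple calculation: an attacker who knows the distribution guesses the most popular passwords first, so after G guesses they have compromised the combined share of the G most common choices, and G guesses take G / rate seconds. The Python sketch below illustrates only that calculation, on a tiny invented sample. The counts are hypothetical and are not the Yahoo! data, and the sketch does not attempt the statistical projection used for the dashed part of the real curve.

```python
from collections.abc import Mapping

SECONDS_PER_DAY = 24 * 60 * 60


def fraction_compromised(counts: Mapping[str, int], guesses: int) -> float:
    """Share of accounts cracked by an attacker who tries the `guesses`
    most popular passwords first (optimal against a known distribution)."""
    total = sum(counts.values())
    top = sorted(counts.values(), reverse=True)[:guesses]
    return sum(top) / total


def days_needed(guesses: int, rate_hz: float) -> float:
    """Per-device cracking time, in days, at a fixed guess rate."""
    return guesses / rate_hz / SECONDS_PER_DAY


# Invented counts for illustration only; this is NOT the Yahoo! data.
toy_counts = {"123456": 50, "password": 30, "iloveyou": 10, "qwerty": 5,
              "dragon": 3, "x7#Lp2!q": 1, "Tr0ub4dor&3": 1}

for g in (1, 2, 4, len(toy_counts)):
    print(f"{g} guesses: {fraction_compromised(toy_counts, g):.0%} of accounts, "
          f"{days_needed(g, 12.5):.2e} days at 12.5 Hz")
```

The skew is the whole story: in this toy sample the single most popular password alone covers half of the accounts, something a uniform model can never capture.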

We have no evidence to suggest users choose iOS passwords stronger than this

It’s certainly possible this analysis is flawed because users choose much stronger passwords to protect their devices than they do their web accounts. We have no hard data either way. Based on years of research and analyzing dozens of password distributions, however, I strongly doubt it. I’ve never seen a human-chosen password distribution substantially stronger than that which I collected at Yahoo! and used here.

There’s also some reason to believe iOS device passwords will be worse than web passwords. Entering passwords on a touchscreen is painful, and a recent study has found that this leads users to choose weaker passwords than they do when using a full-size keyboard.

The system defaults users to choosing only a 4-digit PIN

The above analysis of password strength is optimistic for another reason: by default, iOS devices are configured to be locked with a “Simple Passcode,” which is only a 4-digit PIN. Once again, we have data showing that users pick a highly skewed distribution of 4-digit PINs, but that doesn’t even matter here. The entire space of 10,000 possible PINs can be exhausted in just over 13 minutes at 12.5 Hz or about 14 hours at 0.2 Hz. For the average user, the expected time is at most half of this, and of course it will be shorter in practice because user choices are non-uniform.
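
The PIN timings follow directly from the size of the space. This sketch assumes a uniformly random PIN, which is the best case for the user; real, skewed PIN choices fall faster.

```python
PIN_SPACE = 10 ** 4  # every 4-digit Simple Passcode, 0000 through 9999


def exhaust_seconds(space: int, rate_hz: float) -> float:
    """Worst-case time to try every candidate at a fixed guess rate."""
    return space / rate_hz


for label, rate_hz in (("12.5 Hz", 12.5), ("0.2 Hz", 0.2)):
    worst = exhaust_seconds(PIN_SPACE, rate_hz)
    # A uniformly random PIN is found, on average, after half the space.
    print(f"{label}: all PINs in {worst / 60:.1f} min ({worst / 3600:.1f} h), "
          f"expected {worst / 2 / 60:.1f} min")
```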

In any case, users with any Simple Passcode have no security against a serious attacker who’s able to start guessing with the help of the device’s cryptographic coprocessor.

Serious security requires absolute guess count limits, not rate limits

It’s hard to ask Apple to tighten the rate limit much below 1 guess per second, as this would result in an annoying delay for users. Even that rate would leave a significant proportion of users vulnerable. The best approach is probably for the Secure Enclave to store an absolute counter of consecutive incorrect guesses, after which memory will be erased. This is a bit tricky, as you need UI that seriously warns legitimate users that they’re at risk of erasing their data with more incorrect guesses. You probably want some rate-limiting as well, to prevent someone from quickly erasing a borrowed phone without knowing the true passcode. This is also a bit more complicated engineering-wise, as the counter must be stored in the crypto coprocessor. And you still can’t protect everybody: a hard limit of 100 guesses would leave about 3% of users vulnerable if we go by the Yahoo! approximation used above.

But that’s the best one can hope for, and that design should be considered security engineering best practice for hardware-protected password encryption.
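
The proposed design can be summarized as a toy model. The class below is a hypothetical illustration in Python, not any real Secure Enclave interface: a counter of consecutive failures sits next to the key material, a correct passcode resets the counter, and reaching the limit erases the key. The default of 100 matches the hard limit discussed above. In real hardware the point is that the counter lives inside the tamper-resistant coprocessor, where an attacker cannot reset it; a Python object offers no such guarantee.

```python
import hmac


class GuessLimitedVault:
    """Toy model of a coprocessor that caps consecutive wrong guesses.

    Hypothetical illustration only: a real design keeps the counter inside
    tamper-resistant hardware so that an attacker cannot reset it.
    """

    def __init__(self, passcode: str, max_failures: int = 100):
        self._secret = passcode.encode("utf-8")   # stand-in for wrapped class keys
        self._max_failures = max_failures
        self._failures = 0                        # consecutive wrong guesses
        self.erased = False

    def attempts_left(self) -> int:
        """What the lock screen UI could show as a warning."""
        return self._max_failures - self._failures

    def try_unlock(self, guess: str) -> bool:
        if self.erased:
            return False                          # keys are gone for good
        if hmac.compare_digest(guess.encode("utf-8"), self._secret):
            self._failures = 0                    # only consecutive failures count
            return True
        self._failures += 1
        if self._failures >= self._max_failures:
            self._secret = b""                    # "erase memory": drop the keys
            self.erased = True
        return False
```

The rate-limiting the text also recommends (to stop someone from quickly burning through the counter on a borrowed phone) would sit in front of `try_unlock` and is left out here.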

It’s probably not practical to use fingerprint data to bolster user passwords

Another approach is to combine the user’s passcode with a value extracted from their fingerprint, as many recent iOS devices incorporate Apple’s Touch ID fingerprint sensor. Currently, it appears Apple is not using this to protect encrypted data [5]. The likely reason is that people’s fingerprints can change and become unreliable to scan, and it’s not acceptable for data to be lost permanently as a result.

It’s hard to overcome this limitation. Apple claims instead that Touch ID can help by allowing users to choose a strong passcode without entering it every time, using their fingerprint to quickly unlock in normal cases.

Paranoid users can protect themselves

If you want to keep your data secure, two main steps are necessary. First, make sure your phone doesn’t get taken while powered on. This is hard, of course, but you can take steps like powering down before you cross an international border. Second, don’t just make your password up. You need true randomness, and hopefully around 40 bits of it. This can be expressed as a 12-digit random number, or a 9-character string of lowercase letters. These aren’t trivial to memorize, but the vast majority of humans can do this with practice. Also, critically, don’t use your iOS device password anywhere else that might leak it. And if possible wipe your screen down carefully before the device gets seized to defend against smudge attacks.
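
Generating such a passcode takes a few lines with a cryptographically secure random source. This sketch uses Python’s standard `secrets` module; the function names are illustrative, and the entropy figures assume each symbol is chosen uniformly and independently, which is exactly what “don’t just make your password up” buys you.

```python
import math
import secrets
import string

SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60


def random_digits(n: int = 12) -> str:
    """n decimal digits from the OS CSPRNG (log2(10) ≈ 3.32 bits each)."""
    return "".join(secrets.choice(string.digits) for _ in range(n))


def random_lowercase(n: int = 9) -> str:
    """n lowercase letters from the OS CSPRNG (log2(26) ≈ 4.70 bits each)."""
    return "".join(secrets.choice(string.ascii_lowercase) for _ in range(n))


def entropy_bits(alphabet_size: int, length: int) -> float:
    """Entropy of a string of `length` independent, uniform symbols."""
    return length * math.log2(alphabet_size)


def expected_years(space: int, rate_hz: float) -> float:
    """Average time to hit a uniformly random choice: half the space."""
    return space / 2 / rate_hz / SECONDS_PER_YEAR


print(random_digits(), f"{entropy_bits(10, 12):.1f} bits")    # 39.9 bits
print(random_lowercase(), f"{entropy_bits(26, 9):.1f} bits")  # 42.3 bits
print(f"{expected_years(10 ** 12, 12.5):,.0f} years at 12.5 Hz")
```

Even at the faster 12.5 Hz rate, a random 12-digit number takes well over a thousand years to find on average.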

Device encryption is still a great step, but it requires great user care

In summary, I applaud Apple for enabling more device encryption by default. However, encryption is not a magic bullet. It certainly protects users from amateur attackers who only attempt to read data using the phone’s installed software and don’t know the unlock code, but these attackers would be foiled even if the data weren’t encrypted on disk.

Against an attacker able to copy the raw memory from a powered-off phone, it’s not a far jump to assume they can talk directly to the crypto coprocessor to guess passwords. Against this level of attacker, any user choosing a 4-digit PIN (the default) will have their data compromised, and so will a large number of users choosing a longer passcode, because of poorly chosen passwords.

I hope the next generation of phones will fix this by changing the limits on guesses. And I sincerely hope that modeling user-chosen passwords as a uniform distribution becomes as out-of-style as thinking key escrow is a good idea.

Thanks to Ed Felten, Arvind Narayanan, Matthew Green and Matthew Smith for comments on drafts of this post. Any mistakes are mine alone.

[1] It would be great if Apple provided users the choice to have more Class keys wiped from memory whenever the phone locks, at the cost of having to enter their full password more often. They don’t and the current specification appears not to support that.

[2] A fundamental challenge appears to be that Apple wants devices to be able to sync with iTunes when plugged in without the user unlocking them, necessitating that many keys are kept in memory.

[3] See this classic survey for a discussion of the challenges of making tamper-resistant processors. In general this area is full of trade-secrets, NDAs and secret audits making public data difficult to find. There don’t appear to be any public details about how well Apple’s A7 processors have implemented a tamper-resistant coprocessor. For this post, I’ve simply assumed this is done reasonably well.

[4] This dataset was collected without ever seeing any user’s password in plaintext, as described in Section IV of this paper on the dataset.

[5] Apple’s spec is not completely clear on whether fingerprint data is used in encryption (see p. 11 here), but it appears it is not. They don’t explicitly state that it is, and elsewhere they state that “The passcode can always be used instead of Touch ID” to unlock the device.


> powering down before you cross an international border.

Ah, is this the real reason TSA requires powering-up devices to carry them on? Interesting…

Interesting blog entry, but there is at least one mistake: according to the linked Apple security document, iOS 8 does support an absolute guess count. If you go to Settings->Touch ID and Passcode there is a switch that enables erasing the phone after 10 failed attempts to enter a passcode. This is disabled by default, unfortunately; it might be good to enable it automatically for users who select a 4-digit passcode. Also, the guess rate is automatically limited to .2 Hz (not 12.5 Hz) after an unspecified number of failed unlock requests. This obviously doesn’t solve the problems of unwise defaults, but at least users have the option of changing them, depending on their level of paranoia.


Rather than a constant delay between guesses, perhaps the hardware should enforce an increasing delay with each wrong guess before it will process the next one. That way, one or two mistaken entries won’t punish the user, but brute-force attempts get a curve even worse than the constant 12-guesses-per-second line you calculated.

Would it make sense to borrow a rate-limiting technique from all the pay-to-really-play game apps? Say, every 50 wrong guesses costs you a 12-hour delay? Depending on the status of backups, wiping a phone should really be a last-ditch situation, and not something that could be done accidentally or deliberately for DoS.

I’ve always wished that holding down the lock button on an iOS device to trigger the power-down prompt would wipe the crypto keys from memory. It wouldn’t inconvenience the user, but it would provide a quick way, in 3-5 seconds, to get security analogous to shutting down the device.