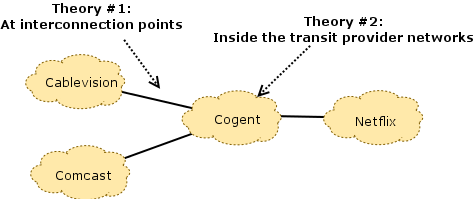

In my post last week, I explained how Netflix traffic was experiencing congestion along end-to-end paths to broadband Internet subscribers, and how the resulting congestion was slowing down traffic to many Internet destinations. Although Netflix and Comcast ultimately mitigated this particular congestion episode by connecting directly to one another in a contractual arrangement known as paid peering, several mysteries about the congestion in this episode and other congestion episodes persist. In the congestion episodes between Netflix and Comcast in 2014, perhaps the biggest question concerns where the congestion was actually taking place. There are several theories about where congestion was occurring; one or more of them are likely the case. I’ll dissect these cases in a bit more detail, and then talk more generally about some of the difficulties with locating congestion in today’s Internet, and why there’s still work for us to do to shed more light on these mysteries.

Theory #1: At interconnection points. The first theory is the commonly held conventional wisdom that seems to be both widely acknowledged and at least partially supported by available measurement data. Both Comcast and Netflix appear to acknowledge congestion at this point in the network, in particular. The two sides differ in terms of their perspectives on what is causing those links to congest, however:

- Netflix asserts that, by paying their transit providers, they have already paid for access to Comcast’s consumers (the “eyeballs”), and that it is the responsibility of Comcast and their transit providers to ensure that these links are provisioned well enough to avoid congestion. The filings assert that Comcast “let the links congest” (effectively, by not paying for upgrades to these links). While it is technically true that, by not upgrading the links, Comcast essentially let the links congest, to suggest that Comcast is somehow at fault for negligence is somewhat disingenuous. After all, they have bargaining power, so it’s expected (and rational, economically speaking) that they are using that leverage when it comes to a peering dispute, regardless of whether flexing their muscles in this way is a popular move.

- Comcast, on the other hand, asserts that Netflix has enough traffic to congest any link they please, at any time, as well as the flexibility to choose which transit provider they use to reach Comcast. As a result, the assertion is that they have a traffic “sledgehammer” of sorts, that could be used to exert tactics from Norton’s Peering Playbook (Section 9): congesting Comcast’s interconnection to one transit provider, forcing Comcast to provision more capacity, then proceeding to congest the interconnection with another transit provider until that link is upgraded, and so forth. This argument is a bit more of a reach, because it suggests active attempts to degrade all Internet traffic through these shared points of congestion, simply to gain leverage in a peering dispute. I personally think this is unlikely. Much more likely, I think, is that Netflix actually has a lot of traffic to send and has very little idea what capacity actually exists along various end-to-end paths to Comcast (particularly in their transit providers, and at the interconnects between their transit providers and Comcast). There is no fool-proof way to determine these capacities ahead of time because data about capacity within the transit providers and at interconnection points that they do not “own” is actually quite difficult to come by.

Theory #2: In the transit providers’ networks. This (seemingly overlooked) theory is intriguing, to say the least. Something that many forget to factor into the timeline of events with the congestion episodes is Netflix’s shift from Akamai’s CDN to Level 3 and Limelight; by some accounts Netflix’s shift to a different set of CDNs coincides with the Measurement Lab data documenting extreme congestion, beginning in the middle of 2011. This was, some say, a strategy on the part of Netflix to reduce their costs of streaming video to their customers, by as much as 50%. That is a perfectly reasonable decision, of course, except that it appears that some transit providers that deliver traffic between these CDNs and Comcast subscribers may have been ill-equipped to handle the additional load, as per the congestion that is apparent in the Measurement Lab plots. It is thus entirely likely that congestion occurred not only at the interconnection points between Comcast and their transit providers, but also within some of the transit providers themselves. An engineer at Cogent explicitly acknowledges their inability to handle the additional traffic in an email on the MLab-Discuss list:

“Due to the severe level of congestion, the lack of movement in negotiating possible remedies and the extreme level of impact to small enterprise customers (retail customers), Cogent implemented a QoS structure that impacts interconnections during the time they are congested in February and March of 2014…Cogent prioritized based on user type putting its retail customers in one group and wholesale in another. Retail customers were favored because they tend to use applications, such as VoIP, that are most sensitive to congestion. M-Labs is set up in Cogent’s system as a retail customer and their traffic was marked and handled exactly the same as all other retail customers. Additionally, all wholesale customers traffic was marked and handled the same way as other wholesale customers. This was a last resort effort to help manage the congestion and its impact to our customers.”

This email acknowledges that Cogent was prioritizing certain real-time traffic (which happened to also include Netflix traffic). If that weren’t enough of a smoking gun, Cogent also acknowledges prioritizing Measurement Lab’s test traffic, which independent analysis has also verified, and which artificially improved the throughput of this test traffic. Another mystery, then, is what caused the return to normal throughput in the Measurement Lab data: Was it the direct peering between Netflix and Comcast that relieved congestion in transit networks, or are we simply seeing the effects of Cogent’s decision to prioritize Measurement Lab traffic (or both)? Perhaps one of the most significant observations that points to congestion in the transit providers themselves is that Measurement Lab observed congestion along end-to-end paths between their servers and multiple access ISPs (Time Warner, Comcast, and Verizon), simultaneously. It would be a remarkable coincidence if the connections between the access ISPs and their providers experienced perfectly synchronized congestion episodes for nearly a year. A possible explanation is that congestion is occurring inside the transit provider networks.

What does measurement data tell us about the location of congestion? In short, it seems that congestion is occurring in both locations; it would be helpful, of course, to have more conclusive data and studies in this regard. To this end, some early studies have tried to zero in on the locations of congestion, with some limited success (so far).

Roy Observations of Correlated Congestion Events. In August 2013, Swati Roy and I published a study analyzing latency data from the BISmark project that highlighted periodic, prolonged congestion between access networks in the United States and various Measurement Lab servers. By observing correlated latency anomalies and identifying common path segments and network elements across end-to-end paths that experienced correlated increases in latency, we were able to determine that more than 60% were close to the Measurement Lab servers themselves, not in the access network. Because the Measurement Lab servers are hosted in transit providers (e.g., Level 3, Cogent), one could reasonably assume that the congestion that we were observing was due to congestion near the Measurement Lab servers—possibly even within Cogent or Level 3. This study was both the first to observe these congestion episodes and the first to suggest that the congestion may be occurring in the networks close to the Measurement Lab servers themselves. Unfortunately, because the study did not collect traceroutes in conjunction with these observations, it was unable to attribute the congestion episodes to specific sets of common links or ASes, yet it was an early indicator of problems that might exist in the transit providers.
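The common-segment reasoning described above can be sketched in a few lines. This is only an illustration, not the study's code: the hop names, the `paths` data, and the helper `shared_hops` are all hypothetical, and the sketch assumes hop-level path data is available (which, as noted, the study itself did not collect).

```python
from collections import Counter

def shared_hops(paths, anomalous):
    """Return hops that appear on every path that showed a latency anomaly.

    paths: dict mapping a path name to its ordered list of hops (made-up data).
    anomalous: set of path names that saw a correlated latency increase.
    """
    if not anomalous:
        return []
    counts = Counter()
    for name in anomalous:
        counts.update(set(paths[name]))  # count each hop once per path
    # Report shared hops in the order they appear on one of the anomalous paths.
    reference = paths[sorted(anomalous)[0]]
    return [hop for hop in reference if counts[hop] == len(anomalous)]

# Hypothetical paths from three home vantage points to measurement servers.
paths = {
    "home_a": ["access_a", "ix_1", "transit_core", "mlab_server"],
    "home_b": ["access_b", "ix_2", "transit_core", "mlab_server"],
    "home_c": ["access_c", "ix_3", "other_core", "other_server"],
}
common = shared_hops(paths, {"home_a", "home_b"})
print(common)
```

In this made-up example the two anomalous paths share nothing in their access networks or interconnects, only elements near the server, which is the shape of evidence that would point toward the transit side.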

Measurement Lab Report. In October 2014, the Measurement Lab reported on some of its findings in an anonymous technical report. The report concludes: “that ISP interconnection has a substantial impact on consumer Internet performance… and that business relationships between ISPs, and not major technical problems, are at the root of the problems we observed.”

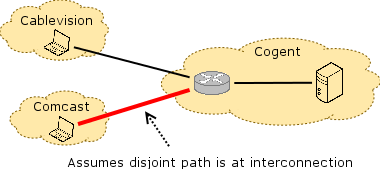

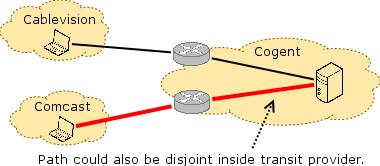

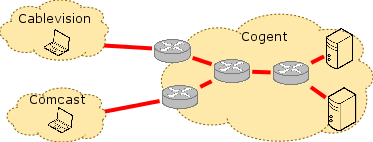

Unfortunately, this conclusion is not fully supported by the data or method from the study; the method that the study relies on is too imprecise to pinpoint the precise location of congestion. The method works as shown in the figure. Clients perform throughput measurements along end-to-end paths from vantage points inside access networks to Measurement Lab servers that are sitting in various transit ISPs. The method assumes that if there is no congestion along, say, the path between Cablevision and Cogent, but there is congestion on the path between Comcast and Cogent, then the point of congestion must be at the interconnection point between Comcast and Cogent. In fact, this method is not precise enough to eliminate other possibilities: Specifically, because of the way Internet routing works, these two end-to-end paths could actually traverse a different set of routers and links within Cogent, depending on whether traffic was destined for Comcast or Cablevision. Unfortunately, this report also failed to consider traceroute data, and the vantage points from which the throughput measurements were taken are not sufficient to disambiguate congestion at interconnection points from congestion within the transit network. In the figures below, assume that the path between a measurement point in Comcast and the MLab server in Cogent is congested, but that the path between a measurement point in Cablevision and the server in Cogent is not congested. The red lines show conclusions that one might draw for possible congestion locations. The figure on the right illustrates why the MLab measurements are less conclusive than the report leads the reader to believe.

The MLab report concludes that if the Comcast-Cogent path is congested, but the Cablevision-Cogent path is not, then the congestion is at the interconnection point.

In fact, the cause for the congestion might be the interconnection point, but the location of congestion might also be on a disjoint link that is inside Cogent.
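To make the ambiguity concrete, here is a small sketch of the elimination logic: links that also carry the uncongested path are ruled out, and whatever remains on the congested path is a candidate. The topology, router names, and the function `candidate_links` are invented for illustration; real Cogent paths are not known from the data discussed here.

```python
def candidate_links(congested_path, clean_path):
    """Links on the congested path that the uncongested path does not use."""
    clean = set(clean_path)
    return [link for link in congested_path if link not in clean]

# Hypothetical link-level paths toward the same measurement server.
comcast_path = [
    ("comcast", "cogent_border_1"),        # interconnection link
    ("cogent_border_1", "cogent_core_a"),  # internal transit link
    ("cogent_core_a", "cogent_agg"),       # internal transit link
    ("cogent_agg", "mlab_server"),         # shared with the other path
]
cablevision_path = [
    ("cablevision", "cogent_border_2"),
    ("cogent_border_2", "cogent_agg"),
    ("cogent_agg", "mlab_server"),
]

candidates = candidate_links(comcast_path, cablevision_path)
for link in candidates:
    print(link)
```

Under these assumptions, three links survive elimination: the interconnect and two links inside the transit network. End-to-end throughput alone cannot say which of them is the bottleneck.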

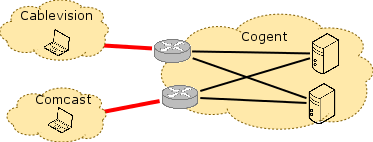

The report also concludes that because traffic along paths from Verizon, Comcast, and Time Warner Cable to measurement points inside of Level 3 occurred in synchrony at different geographic locations, that the problems must be at interconnection points between these access providers and Level 3. It is unclear how the report reaches this conclusion. Given that multiple access providers see similar degradation, it is also possible that the Level 3 network itself is experiencing congestion that is manifesting at multiple observation points as a result of under-provisioning. Without knowing the vantage points where the measurements were taken from, it is difficult to disambiguate multiple correlated congestion events at interconnects from one or more congestion points that are internal to the transit network itself. In summary, the report’s conclusion that “[the data] points to interconnection-related performance degradation” is simply not conclusively supported by the data.

The MLab report concludes that if the paths between multiple access providers and multiple MLab servers in the transit provider are congested, then interconnection between the networks is the location of congestion.

In fact, it is equally likely that this congestion is occurring on one or more links inside the transit provider. There might even be a single link inside the transit provider that causes congestion on these multiple end-to-end paths.

CAIDA Report on Congestion. In November 2014, researchers from CAIDA published a paper exploring an alternate method for locating congestion. The idea, in short, is to issue “ping” messages to either side of a link along an end-to-end Internet path and observe differences in the round-trip times of the replies that come back from either side of the link. This method appears to point to congestion at the interconnection points, but it depends on being able to use the traceroute tool to accurately identify the IP addresses on either side of a link. This, in fact, turns out to be much more difficult than it sounds: because the two interfaces on an interconnection link must be on the same IP subnet, both sides of the interconnection point will have IP addresses from just one of the two ISPs. The numbering along the path will thus not change on the link itself, but rather on one side of the link or the other. Thus, it is incredibly tricky to know whether the link being measured is actually the interconnection point or rather one hop into one of the two networks. We explored this problem in a 2003 study (See Figure 4, Section 3.3), where we tried to study failures along end-to-end Internet paths, but our method for locating these interconnection points is arguably ad hoc. There are other complications, too, such as the fact that traceroute “replies” (technically, ICMP time exceeded messages) often have the IP address of the interface that sends the reply back to the source on the reverse path, not the IP address along the forward path. Of course, the higher latencies observed could also be on a (possibly asymmetric) reverse path, not on the forward path that the traceroute attempts to measure.
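The near-side/far-side comparison can be sketched roughly as follows. This is not the paper's implementation: the function `far_side_elevated`, the use of the minimum RTT as a baseline, the 10 ms threshold, and the sample values are all assumptions chosen for illustration.

```python
def far_side_elevated(near_rtts, far_rtts, threshold_ms=10.0):
    """Flag samples where the far-side RTT rises but the near-side RTT does not.

    near_rtts, far_rtts: RTT samples (ms) taken at the same times to the
    interfaces believed to sit on either side of one link.
    threshold_ms: assumed cutoff for "elevated above baseline".
    """
    near_base = min(near_rtts)  # assumed baseline: smallest observed RTT
    far_base = min(far_rtts)
    flags = []
    for near, far in zip(near_rtts, far_rtts):
        near_up = (near - near_base) > threshold_ms
        far_up = (far - far_base) > threshold_ms
        flags.append(far_up and not near_up)
    return flags

# Made-up samples: the far side inflates in the middle, the near side stays flat.
near = [10.0, 11.0, 10.0, 12.0, 10.0]
far = [12.0, 13.0, 40.0, 45.0, 12.0]
flags = far_side_elevated(near, far)
print(flags)
```

Even when such a check fires, it only says that queueing appeared somewhere between the two probed addresses or on the return path. It cannot confirm that those two addresses really bracket the interconnection link, which is the attribution problem described above.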

The paper provides some evidence to suggest that congestion is occurring at interconnection points (Theory #1), but it is inconclusive (or at least silent) as to whether congestion is also occurring at other points along the path, such as within the transit providers. To quote the paper: “The major challenge is not finding evidence of congestion but associating it reliably with a particular link. This difficulty is due to inconsistent interface numbering conventions, and the fact that a router may have (and report in ICMP responses) IP interface addresses that come from third-party ASes. This problem is well understood, but not deeply studied.” In the recent open Internet order, the FCC also acknowledged its inability to pinpoint congestion:

“We decline at this time to require disclosure of the source, location, timing, or duration of network congestion, noting that congestion may originate beyond the broadband provider’s network and the limitations of a broadband provider’s knowledge of some of these performance characteristics…While we have more than a decade’s worth of experience with last-mile practices, we lack a similar depth of background in the Internet traffic exchange context.”

Where do we go from here? We need better methods for identifying points of congestion along end-to-end Internet paths. Part of the difficulty in the above studies is that they are trying to infer properties of links fairly deep into the network using indirect (and imprecise) methods. The lack of precision points mainly to the poor fidelity of existing tools (e.g., traceroute), but the operators of these ISPs clearly know the answers to many of these questions. In some cases (e.g., Cogent), the operators have conceded that their networks are under-provisioned for certain traffic demands and have not properly disclosed their actions (indeed, Cogent’s website actually has a puzzling and apparently contradictory statement that it does not prioritize any traffic). In other cases, ISPs are understandably guarded about revealing the extent (and location) of congestion in their networks—perhaps justifiably fearing that divulging such information might put them at a competitive disadvantage. To ultimately get to the bottom of these congestion questions, we certainly need better methods, some of which might involve ISPs revealing, sharing, or otherwise combining information about congestion or traffic demands with each other in ways that still respect business sensitivities.

Have you filed this in the FCC record? Open proceedings include 14-57 the Comcast TWC merger and 14-90 the AT&T / Direct TV merger.

Isn’t the point here that Comcast could easily answer this question but doesn’t?

That’s mostly the point, in a word.

Longer version:

Certainly they know exactly to what extent the interconnects are congested. I believe their FCC filings actually acknowledge that the interconnect is congested. What is not public (among other things) is the traffic mix on those links, especially over time. The claim that Peering Playbook tactics were used sounds pretty extreme to me, but it is not so farfetched that it has never happened, so even that is hard to prove or disprove without some data.

There is another piece of the puzzle that even Comcast doesn’t know for sure: they can’t definitively say whether there is also congestion inside of Cogent with their own data—the MLab data turned out to help, in this case. The MLab-Discuss list reflects some of that sleuthing: https://groups.google.com/a/measurementlab.net/forum/#!topic/discuss/UTCbtSaMt9c

But yes, in general, it’s fair to say that the answers to these questions are known—yet nobody really has the full picture, and different parties are loathe to share their pieces of the picture, perhaps with good reason (hence, finger pointing ensues).

Thanks Nick for an informative post and especially for the discussion that ensued. I have to admit I didn’t make it through all of it; but that is a personal fault on my end. Skimming and scanning after Theory #2 was laid forth; including in the comments I come to this seemingly gem of information.

“But yes, in general, it’s fair to say that the answers to these questions are known—yet nobody really has the full picture, and different parties are loathe to share their pieces of the picture”

That seems to me to be the most truthful answer. Why are they so loathe to share their pieces? (is my question) Could it be bad PR? Or, could it be something deeper; motivation by money they can extract from others? I don’t know; but the whole topic reminds me of a poem. I realize this poem has nothing to do with the topic at hand; but by sharing this poem, I hope to express my own frustration with the entire industry as I have seen it regarding this topic.

The Cold Within

Six humans trapped by happenstance

In bleak and bitter cold.

Each one possessed a stick of wood

Or so the story’s told.

Their dying fire in need of logs

The first man held his back

For of the faces round the fire

He noticed one was black.

The next man looking ‘cross the way

Saw one not of his church

And couldn’t bring himself to give

The fire his stick of birch.

The third one sat in tattered clothes.

He gave his coat a hitch.

Why should his log be put to use

To warm the idle rich?

The rich man just sat back and thought

Of the wealth he had in store

And how to keep what he had earned

From the lazy shiftless poor.

The black man’s face bespoke revenge

As the fire passed from his sight.

For all he saw in his stick of wood

Was a chance to spite the white.

The last man of this forlorn group

Did nought except for gain.

Giving only to those who gave

Was how he played the game.

Their logs held tight in death’s still hands

Was proof of human sin.

They didn’t die from the cold without

They died from the cold within.

Nick,

This was a very interesting pair of posts, and it sheds considerable light on what we know and what we don’t know about congestion from a technical perspective.

However, the initial policy question is readily answerable from the data. Was broadband discrimination occurring due to interconnection choices by Comcast? Yes.

As you note, discrimination via intentional interconnect congestion is a known tactic. Furthermore, congestion at interconnection can be sufficient to cause discrimination regardless of the state of the network elsewhere. If there were also congestion at the transit providers (your Theory #2), it does not change this fact. Perhaps that would exacerbate the end-users’ poor broadband experience, but the baseline discrimination remains.

In such a scenario, interconnection congestion is the only congestion that policymakers should be concerned about. This is because the market structure is such that “terminating access monopoly” dynamics incentivize last-mile providers to abuse their position by discriminating through interconnection discrimination, whereas competitive market dynamics incentivize fair and efficient resolution of transit congestion. Determining how much transit congestion exists is only useful for he-said/she-said purposes and for better engineering… but not for resolving the policy questions about last-mile broadband discrimination.

Likewise, whether or not you call this set of policy questions “net neutrality” is immaterial. I have come to prefer “broadband discrimination,” (Wu’s alternative term that never caught on). Furthermore, even if the Open Internet Order addresses only paid prioritization, the Title II classification places the question within the FCC’s ambit.

You note that “it’s expected (and rational, economically speaking)” for Comcast to exercise its unique bargaining power through intentional last-mile congestion. It is rational, but is it efficient? In your first post, you say “Both sides of this argument are reasonable and plausible—this is a classic ‘peering dispute’.” I emphatically disagree. The lack of last-mile competition that you acknowledge, and the resulting incentives that I just described, make it far from a classic peering dispute. This was the focus of my 2010 rant, and my more recent 2014 rant.

I’m glad that you dismissed the silly claims by last-mile providers that Netflix is or would punish them by dumping traffic on to links of their choosing. The providers have been making this argument (and even claiming that edge providers can avoid discrimination by switching links) for some time.

Research about transit congestion can be very useful… but not for debates about last-mile broadband policy.

Hi Steve,

Thanks a lot for your very thoughtful comments. On most of your points I agree. On a few, I have a couple of points where I would differ, or at least clarify.

1. However, the initial policy question is readily answerable from the data. Was broadband discrimination occurring due to interconnection choices by Comcast? Yes.

I actually think my answer to your question is “no”, although some of this is perhaps semantics:

“Discrimination” implies that there is some network policy to preferentially treat some types of traffic over others. In fact, that is not what happened in this case. *All* traffic suffered as a result of the congestion along paths between Comcast and Netflix. That’s not discrimination.

“due to interconnection choices by Comcast”. This statement has a value judgment that suggests that it is Comcast’s “fault” for choosing not to directly connect to Netflix for free. But, interconnection is bilateral. If you extend the argument, you could say that anytime someone wants to connect directly to an access ISP and Comcast chooses not to connect, then it is “discrimination”. To suggest that Comcast is discriminating by exacting some fee for interconnection is not how I would characterize it; it is taking advantage of the bargaining leverage they have by virtue of their market position (which previous regulatory action helped entrench). We can bemoan the problems with monopolies in this situation, but to suggest that Comcast shouldn’t *ever* be able to charge content providers for interconnection also runs counter to interconnection economics. Should Comcast be able/allowed to charge? Probably. Do they derive value from connecting to Netflix, and should this be taken into account when figuring out how money should change hands? Probably. Should regulation play a role in enforcing that? Maybe, although perhaps focusing on competition might be addressing the problem, as opposed to regulating the symptom. Should Comcast bear the full cost of connecting to content providers? Almost certainly not.

2. In such a scenario, interconnection congestion is the only congestion that policymakers should be concerned about.

I don’t think I necessarily agree. First of all, it is impossible to have a reasoned policy discussion on this topic without data that gives us a clear picture of where the congestion is. Both scenarios are relevant: Netflix has a choice: (1) connect directly to Comcast (at some cost), or (2) connect through transit providers (at some cost).

If there is congestion in the transit providers themselves, the congestion also thrusts Netflix into negotiations for direct connections to Comcast, as opposed to being able to use the transit providers, which significantly weakens Netflix’s leverage in a peering dispute.

Second, if the congestion in transit providers coincided with a decision to build out the OpenConnect CDN, that is certainly relevant, since the choices that Netflix has about how to serve traffic (i.e., from which caches, to which eyeballs) also come into play as to where congestion arises, and whether it would show up in certain transit networks at all.

Third, the findings that Cogent is prioritizing both Netflix traffic (presumably to manage congestion) and MLab test traffic (presumably to make it look better by hiding evidence of congestion in its own network) seem highly relevant, particularly given the implications in the MLab report that congestion is occurring at interconnection. When a transit provider prioritizes the probe traffic, it’s going to make it more likely that analysis of the data points the finger at other parts of the network. This is both fishy, and relevant to the last-mile discussion.

Finally, as we can see from the studies referenced in the post, existing measurement techniques can’t actually differentiate the two anyway! Regardless of what’s technically possible right now, though, I think both are relevant, and a holistic approach may ultimately shed more light.

3. In your first post, you say “Both sides of this argument are reasonable and plausible—this is a classic ‘peering dispute’.” I emphatically disagree. The lack of last-mile competition that you acknowledge, and the resulting incentives that I just described, make it far from a classic peering dispute.

Fair enough. I meant that it is “classic” in the sense that both sides are arguing about how much one should pay the other for a shared interconnection. That said, it is “new”, because one of the sides in the dispute has tremendous bargaining leverage, by virtue of having a near-monopoly on eyeballs.

4. I’m glad that you dismissed the silly claims by last-mile providers that Netflix is or would punish them by dumping traffic on to links of their choosing.

I did dismiss them as unlikely in this specific case, but in general actually I’m not sure they are “silly”. See Section 9 of Norton’s Peering Playbook. People actually do play exactly these kinds of games, and that section of the playbook describes this tactic to a T, as well as some anecdotes where this has actually taken place. So, it is *possible*. However, my gut instinct is that the other case (incidental congestion due to lack of visibility about throughput) may be a more likely scenario in this case. (But, that’s totally an intuitive judgment that is not based on actual data; it would be better if we had data about this.)

The link you provided is a great example of the argument that the access ISPs are putting forth about Netflix being able to choose their transit providers. That’s true, and I think it also points to why the problems can’t be separated so cleanly. Netflix has several choices for getting traffic to Comcast. One is to pay transit providers; another is to connect directly. Whether transit networks are under-provisioned will affect whether Netflix has any choice in whether they will even be able to use the transit providers. Effectively, the congestion backs them into a situation where they have very little leverage, because they have no other choice to get to Comcast than via a direct connection. Then, the lack of competition in access ISPs pushes them further into a corner and weakens their position even further.

The lack of competition at the access is clearly an issue, but it is not clear that one can separate underprovisioned transit from the discussion entirely.

5. Research about transit congestion can be very useful… but not for debates about last-mile broadband policy.

Yes, I think technically it is interesting in its own right, particularly as it pertains to what is technically possible (or not) without some more data-sharing from providers themselves. But, per point #2 above, I think one has to look at congestion holistically, with both transit and access providers in the picture, even if for no other reason than we have no technical way of teasing the two apart at the moment.

Thanks again for a great discussion,

-Nick

Agreed, great conversation. It’s going to get too long if I reply all in one post, so I’ll take each of your numbers in turn:

Discrimination can be based on type or on source. In this case, Comcast arguably congested (and thus discriminated) all traffic from sources that route along the specific interconnect’s path. When Comcast identifies high-volume sources where it would benefit from leveraging its last-mile market power, it can congest. To be sure, there is collateral damage against non-targeted sources that also route on that path, but it’s still discrimination. It’s certainly not *all* traffic that terminates on their network.

I think it’s clear that Comcast chose not to upgrade the interconnection points. I don’t see the implicit value judgment in that text. Your issue seems to have more to do with “discrimination” (discussed above). But that is not a value judgment. Rather, it is a factual judgment.

I certainly don’t suggest that Comcast should not be able to charge a fee. There is a difference between “some fee” and a market-inefficient fee. The fundamental problem is that market failure makes it impossible for the market to reach efficiency. Thus, I agree that guidelines or regulation are necessary. “Fun” indeed. However, congestion in the transit portion of the network does not bear directly on that question.

These are great points!

Yes, I think it’s fair to say that my quibble is with the choice of the word “discrimination”. More precisely, I think the suggestion that Comcast was “throttling” Netflix is something we actually don’t have data on. Throttling implies something very specific, such as the use of a traffic shaper (which they have done in the past for things like BitTorrent, by the way). But, so far at least, we do not have actual data to suggest that any throttling was taking place. Whether or not “discrimination” is taking place, I think, depends on how you define discrimination. But, I think if we both agree that there is “collateral damage” along that path, then your definition (and original statement) definitely holds some weight.

Comcast chose not to upgrade the interconnection points, sure. On the other hand, Cogent, Level 3, Tata, etc. equally chose not to upgrade the interconnection points with Comcast. To suggest that Comcast alone should bear the complete cost of that link upgrade is where I’m less comfortable. Should they bear some of that cost? Certainly—and it should have something to do with the value that Comcast derives from connecting to Netflix.

Put it this way: If Comcast subscribers garnered no value from being able to stream Netflix, then obviously they shouldn’t have to pay Netflix. But, clearly some of that monthly fee that Comcast subscribers pay is for the benefit of watching Netflix. The amount that Comcast should have to bear for the upgrade should somehow relate to the value that its customers derive from watching Netflix. The main issue still, I believe, is that if there were market competition, we wouldn’t have to figure out what that number should be; because there isn’t, we know that it’s more than zero and less than 100 percent, but exactly what should it be? If there were competition, of course, the market would answer this question for us. Vishal Misra at Columbia has done some nice work on this topic by exploring applications of the Shapley Value; there’s likely more to do.

Regardless, although I have some thoughts on these aspects, my expertise is more on the technical side of things. These posts were meant to shed some technical light on certain things that are more uncertain than some literature would lead the reader to believe. This post, in particular, was intended to point out that _technically speaking_ it’s harder to isolate congestion at the interconnection points than perhaps some have been led to believe. Making these inferences from the edge is hard.

I think ultimately this becomes an even more interesting question if it comes to the point where we can say that it is technically difficult (impossible?) to accurately pinpoint these congestion locations without requiring ISPs inside the network to shed some more light on the situation. Then what? 🙂

Interesting point! Transit provider congestion could exacerbate the market failure problem created by Comcast’s terminating access position, at least in the short term. On the other hand, it doesn’t fundamentally change anything about Comcast’s terminating access position with respect to Netflix, whether indirectly via transit or via direct connection. In the long term, it might be irrelevant whether the market power is being leveraged directly or indirectly. (Incidentally, I wouldn’t refer to the direct connection as “peering” given that they are not peers, at least in traditional “settlement-free” interconnection parlance.)

I take you to be saying something similar to the first point: that the nature of transit congestion has an effect on Netflix’s relative market power. Maybe, at least in the short term.

Agreed.

Agreed, where there is incomplete information about interconnection congestion, and where better data about transit congestion can improve that information, it’s relevant. I had read your post to concede that interconnection congestion existed. However, where the degree is a matter of dispute that can be further clarified by data about transit congestion, it would indeed be useful.

My primary discomfort with a greater emphasis on transit congestion is that information about transit congestion is not necessary in order to do informed policymaking on last-mile broadband. Presuming that the policymaker can get accurate information about interconnection congestion, they need no more. Emphasizing transit congestion could turn into a rathole that actually stalls effective policymaking. Of course, it may be unrealistic to presume that the policymaker can get accurate information about interconnection congestion in the current regulatory environment.

“My primary discomfort with a greater emphasis on transit congestion is that information about transit congestion is not necessary in order to do informed policymaking on last-mile broadband. Presuming that the policymaker can get accurate information about interconnection congestion, they need no more. Emphasizing transit congestion could turn into a rathole that actually stalls effective policymaking.”

Let’s not do that! You are right that there is some element of transit congestion that may prove distracting. However, in the specific case of (say) the MLab report, the CAIDA report, etc., the main point of the post is just to point out that we cannot rule out congestion in other locations. The MLab report in particular does not necessarily point to interconnection congestion at all; much of what is observed could actually be congestion in Cogent (I doubt that is really the case, but my doubt is based on intuition, not on the data itself, which is inconclusive without traceroutes). That doesn’t exonerate (and should not distract from) the discussions about interconnection, but given all of this discussion about prioritization, why is there not more uproar about a transit provider who is not only implementing fast lanes, but has openly admitted to it?

I agree with you that interconnection at the edge is experiencing what appears to be a market failure, and that is perhaps the most important point. Yet, rather than take sides in that debate (since I am a scientist, not a policymaker), my primary argument is that using data that does not support these conclusions to make that point only harms credibility in the end. From a technical perspective, we need to be working towards (and advocating for) more illuminating data.

Policymakers like yourself can do the best job if we scientists can provide not only better methods for drawing conclusions, but also careful analysis about precisely what can and cannot be said with the measurements we have.

More data is good. However, incomplete data must not stand in the way of urgent policymaking when enough data and logic exist to do so. For example, the battle over “Form 477” data (broadband provider self-reporting about service coverage) has been waged for more than a decade, with last-mile providers using “proprietary” claims to limit disclosure and stall the process. There have been some recent improvements:

https://www.fcc.gov/document/notice-request-access-form-477-broadband-data-0

But the system is still broken. Did you see this article?

http://consumerist.com/2015/03/25/new-homeowner-has-to-sell-house-because-of-comcasts-incompetence-lack-of-competition/

Scientists have an interest in obtaining the best data possible, and when providers hold that data hostage, scientists are right to engage in the resulting policy debate.

I still think that the proposition is silly. As noted in the second half of Section 9 of the Peering Playbook, access ISPs like Comcast have ample methods for countering this. Thus, it serves as no meaningful counterweight to the last-mile market power. The last-mile network is not, in the wise words of Ted Stevens, “something that you just dump something on. It’s not a big truck.” It is a series of tubes. But all of those tubes terminate in the same place, so no matter where the traffic is dumped, the dumpee can control it.