This week saw an exciting announcement about the experimental deployment of DOCSIS 3.1 in limited markets in the United States, including Philadelphia, Atlanta, and parts of northern California, which will bring gigabit-per-second Internet speeds to many homes over the existing cable infrastructure. The potential for gigabit speeds over existing cable networks brings hope that more consumers will ultimately enjoy much higher-speed Internet connectivity, both in the United States and elsewhere.

This development is also a pointed response to the not-so-implicit pressure from the Federal Communications Commission to deploy higher-speed Internet connectivity. That pressure includes other developments, such as the redefinition of broadband as a downstream throughput rate of 25 megabits per second (Mbps), up from a previous (and somewhat laughable) definition of 4 Mbps. Many commissioners have also stated their intention to raise the broadband threshold to a downstream throughput of 100 Mbps, a further indication that ISPs will face increasing pressure to provide faster links to home networks. Yet the National Cable and Telecommunications Association has also claimed in an FCC filing that such speeds are far more than a “typical” broadband user would require.

These developments and this posturing raise the question: How will consumers change their behavior in response to faster downstream throughput from their Internet service providers?

Ph.D. student Sarthak Grover, postdoc Roya Ensafi, and I set out to study this question with a cohort of about 6,000 Comcast subscribers in Salt Lake City, Utah, from October through December 2014. The study was a randomized controlled trial, an experimental method commonly used in science in which a group of users is randomly divided into a control group (whose users experience no change in conditions) and a treatment group (whose users are subject to a change in conditions). Assuming the cohort is large enough and represents a cross-section of the demographic of interest, and that users are assigned to the treatment group at random, it is possible to observe differences between the two groups’ outcomes and conclude how the treatment affects the outcome.
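To make the method concrete, here is a minimal Python sketch of random assignment followed by a difference-in-means comparison. It assumes each subscriber already has a single outcome value (for example, average daily bytes); the function names and the fixed seed are hypothetical, and the sketch illustrates the general technique rather than the procedure actually used to select the treatment group in our study.

```python
import random
from statistics import mean


def assign_groups(subscriber_ids, treatment_fraction, seed=0):
    """Randomly split subscribers into (control, treatment) lists.

    Hypothetical helper: shuffles the IDs with a seeded RNG, then puts the
    first `treatment_fraction` of them in the treatment group.
    """
    rng = random.Random(seed)
    ids = list(subscriber_ids)
    rng.shuffle(ids)
    n_treatment = round(len(ids) * treatment_fraction)
    return ids[n_treatment:], ids[:n_treatment]


def treatment_effect(outcome, control, treatment):
    """Difference in mean outcome, treatment minus control.

    `outcome` maps subscriber ID -> a per-subscriber metric that is assumed
    to be precomputed (e.g., average daily bytes).
    """
    treated = mean(outcome[s] for s in treatment)
    untreated = mean(outcome[s] for s in control)
    return treated - untreated
```

With enough subscribers, random assignment makes the two groups comparable on average, so a nonzero difference can be attributed to the treatment rather than to who happened to land in each group; a real analysis would also test whether the difference is statistically significant.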

In this study, the control group consisted of about 5,000 Comcast subscribers who were paying for (and receiving) 105 Mbps downstream throughput; the treatment group, on the other hand, comprised about 1,500 Comcast subscribers who were paying for 105 Mbps but were silently upgraded to 250 Mbps at the beginning of the study period. In other words, users in the treatment group were receiving faster Internet service but were unaware of the faster downstream throughput of their connections. We explored how this treatment affected user behavior and made a few surprising discoveries:

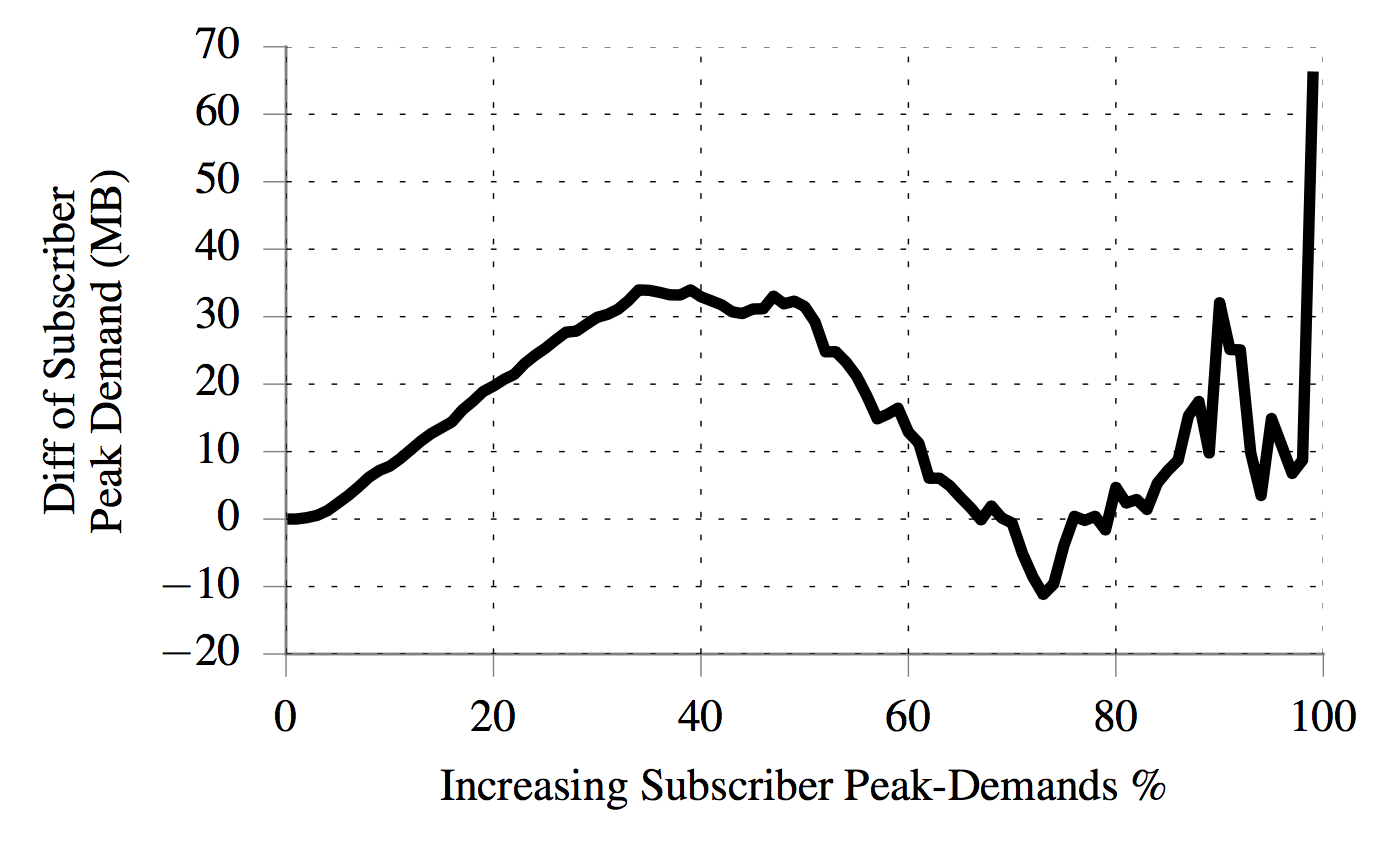

“Moderate” users tend to adjust their behavior more than “heavy” users. We expected that the subscribers who downloaded the most data in the 250 Mbps service tier would account for the largest difference in mean demand between the two groups (previous studies have observed this phenomenon, and we do observe this behavior for the most aggressive users). To our surprise, however, the median subscribers in the two groups exhibited much larger differences in traffic demand, particularly at peak times. Notably, the 40% of subscribers with the lowest peak demands more than doubled their daily peak traffic demand in response to the service-tier upgrade (i.e., in the treatment group).

With the exception of the most aggressive peak-time subscribers, the subscribers who are below the 40th percentile in terms of peak demands increase their peak demand more than users who initially had higher peak demands.
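A percentile-by-percentile comparison like this one could be sketched as follows, assuming each subscriber’s usage is a list of byte counts at fifteen-minute intervals (the granularity of our data) that starts at midnight and covers whole days. Summarizing a subscriber by the median of their daily peaks, and comparing the groups at decile cut points, are illustrative choices for this hypothetical sketch, not necessarily the exact method in the paper.

```python
from statistics import median, quantiles

INTERVALS_PER_DAY = 96  # 24 hours of 15-minute intervals


def daily_peaks(byte_counts):
    """Largest 15-minute byte count for each complete day in the series.

    Assumes the series starts at midnight; a trailing partial day is dropped.
    """
    days = len(byte_counts) // INTERVALS_PER_DAY
    return [
        max(byte_counts[d * INTERVALS_PER_DAY:(d + 1) * INTERVALS_PER_DAY])
        for d in range(days)
    ]


def subscriber_peak_demand(byte_counts):
    """Summarize one subscriber by the median of their daily peaks (illustrative choice)."""
    return median(daily_peaks(byte_counts))


def percentile_ratios(treatment_peaks, control_peaks, n=10):
    """Treatment/control ratio of peak demand at each of the n-1 quantile cut points.

    With n=10 these are the deciles; assumes every control cut point is nonzero.
    """
    t_cuts = quantiles(treatment_peaks, n=n)
    c_cuts = quantiles(control_peaks, n=n)
    return [t / c for t, c in zip(t_cuts, c_cuts)]
```

A ratio above 2 at the lower cut points would correspond to the doubling of peak demand described above for the subscribers below the 40th percentile.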

This result suggests a surprising trend: it’s not the aggressive data hogs who account for most of the increased use in response to faster speeds, but rather the “typical” Internet user, who tends to use the Internet more as a result of the faster speeds. Our dataset does not contain application information, so it is difficult to say what, exactly, is responsible for the higher data usage of the median user. Yet the result uncovers an oft-forgotten phenomenon of faster links: even existing applications that do not need to “max out” the link capacity (e.g., Web browsing, and even most video streaming) can benefit from a higher-capacity link, simply because they will see better performance overall (e.g., faster load times and more resilience to packet loss, particularly when multiple parallel connections are in use). It might just be that the typical user is using the Internet more with the faster connection simply because the experience is better, not because they’re interested in filling the link to capacity (at least not yet!).

Users may use faster speeds for shorter periods of time, and not always during “prime time”. There has been much ado about prime-time video streaming, and we most certainly see those effects in our data. To our surprise, average usage per subscriber during prime-time hours was roughly the same in the treatment and control groups; outside of prime time, however, the difference between the two groups was much more pronounced: during non-prime-time weekday hours, average usage per subscriber in the treatment group was 25% higher than in the control group. We also observe that peak-to-mean usage ratios are significantly higher in the treatment group than in the control group, indicating that users with faster speeds may periodically (and briefly) take advantage of the significantly higher speeds, even though they do not sustain a rate high enough to exhaust the added capacity.
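Both measurements in this paragraph can be computed from the same fifteen-minute byte counts. The sketch below is hypothetical: it assumes each series starts at midnight, and the 19:00–23:00 prime-time window is an assumption, since the post does not define prime-time hours.

```python
from statistics import mean

INTERVALS_PER_HOUR = 4
INTERVALS_PER_DAY = 96
# Hypothetical prime-time window [19:00, 23:00); the post does not define it.
PRIME_START_HOUR, PRIME_END_HOUR = 19, 23


def peak_to_mean(byte_counts):
    """Largest interval divided by the average interval (a burstiness measure)."""
    avg = mean(byte_counts)
    if avg == 0:
        return 0.0  # no traffic at all: report 0 rather than divide by zero
    return max(byte_counts) / avg


def split_prime_time(byte_counts):
    """Return (prime_time_bytes, other_bytes) for a series starting at midnight."""
    prime = other = 0
    for i, count in enumerate(byte_counts):
        hour = (i % INTERVALS_PER_DAY) // INTERVALS_PER_HOUR
        if PRIME_START_HOUR <= hour < PRIME_END_HOUR:
            prime += count
        else:
            other += count
    return prime, other
```

Two subscribers who transfer the same total volume can have very different ratios: `peak_to_mean([10, 10, 10, 10])` is `1.0`, while `peak_to_mean([0, 0, 0, 40])` is `4.0`, which is the kind of short, bursty use the treatment group appears to show.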

These results are interesting for last-mile Internet service providers because they suggest that speeds at the edge may not currently be the limiting factor for user traffic demand. Specifically, the fact that peak traffic changed outside of prime-time hours, while prime-time usage stayed roughly the same, suggests that even (relatively) lower-speed connections (e.g., 105 Mbps) may be sufficient to satisfy users’ demands during prime time. Of course, the constraints on prime-time demand (much of which is streaming) likely result from other factors, including the available content and perhaps the well-known phenomenon of congestion in the middle of the network rather than in the last mile. All of this points to the increasing importance of resolving the performance issues that we see as a result of interconnection. In the best case, faster Internet service moves the bottleneck from the last mile to elsewhere in the network (e.g., interconnection points, long-haul transit links); in reality, however, the bottlenecks seem to be there already, and we should focus on mitigating those points of congestion.
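A deliberately simplified model shows why a faster last mile may not translate into more delivered throughput: if the end-to-end rate is capped by the slowest segment on the path, upgrading any other segment changes nothing. The segment names and Mbps values below are hypothetical, and the model ignores TCP dynamics and sharing among competing flows.

```python
def effective_throughput(path_mbps):
    """End-to-end rate under a simple model: the slowest segment caps the flow."""
    return min(path_mbps.values())


def bottleneck(path_mbps):
    """Name of the segment that limits throughput."""
    return min(path_mbps, key=path_mbps.get)


def upgrade_gain(path_mbps, segment, new_mbps):
    """Throughput gained by upgrading one segment to `new_mbps`."""
    upgraded = {**path_mbps, segment: new_mbps}
    return effective_throughput(upgraded) - effective_throughput(path_mbps)


# Hypothetical path: a 105 Mbps access link behind a congested 40 Mbps interconnect.
path = {"access": 105, "interconnect": 40, "server": 500}
print(bottleneck(path))
print(upgrade_gain(path, "access", 250))
print(upgrade_gain(path, "interconnect", 200))
```

Under this model, upgrading the access link from 105 to 250 Mbps gains nothing, while relieving the interconnect lets the flow rise until the access link becomes the bottleneck again.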

Further reading and study. You’ll be able to read more about our study in the following paper: A Case Study of Traffic Demand Response to Broadband Service-Plan Upgrades. S. Grover, R. Ensafi, N. Feamster. Passive and Active Measurement Conference (PAM). Heraklion, Crete, Greece. March 2016. (We will post an update when the final paper is published in early 2016.) There is plenty of room for follow-up work, of course; notably, the data we had access to did not have information about application usage, and only reflected byte-level usage at fifteen-minute intervals. Future studies could (and should) continue to study the effects of higher-speed links by exploring how the usage of specific applications (e.g., streaming video, file sharing, Web browsing) changes in response to higher downstream throughput.

This is just bloatware. The whole industry is based on providing faster speeds that don’t really add anything useful. Notice also how this is coordinated by the government. The objective here is to keep corporatism from collapsing by forcing people to buy goods and services they don’t need, on credit.

Kreylix, it’s already happening in many markets where fiber is available. DOCSIS 3.1 is important because it allows for these speeds without a massive infrastructure outlay; i.e., the speeds are possible over *existing* cable infrastructure.

tz, matt, manole: Agreed that the lower speeds are probably “enough” for most applications we use today (which was sort of the point of my post). One thing we’ve observed in other work is that *latency* is a performance bottleneck more than throughput, for many applications (in particular, downloading Web pages). A reasonable question is thus whether caching/CDN infrastructure might be a better investment, particularly for certain applications. Here’s that research:

http://conferences.sigcomm.org/imc/2013/papers/imc120-sundaresanA.pdf

-Nick

“How Will Consumers Use Faster Internet Speeds?” – This is Comcast…do you know how slow this rollout will be, if it ever even happens? So the real answer to your question is NA – consumers are unlikely to get these faster speeds. Because. Comcast.

Nice post, and informative.

Commenters: Thank you for sharing your perceptions of your connections.

Please appreciate that the article discusses two major factors: when during the day, and by how much, do faster link speeds at the edges of the Internet affect aggregate bandwidth growth? And which users (and their flows) are the biggest statistical beneficiaries of higher link speeds?

Whether or not users notice the difference in feedback response (the bandwidth-delay product still improves with capacity even if latency remains static), it still affects their behavior, which translates into traffic patterns.

I have a gigabit connection, and it doesn’t feel any different from 100Mb, which I think is enough.

I have a 50 Mbps connection, which is more than enough. That being said, in a perfect world, copyright laws would be a non-issue, and a gigabit connection would allow you to download anything you please in whatever quality you choose, in a matter of minutes. The issue isn’t necessarily the infrastructure; it’s the companies and the government(s) that control it, as well as the laws that protect and prevent digital information from spreading.

Do I still have the same 250 gig / month data cap? Is this just a way to burn through it faster?

They won’t. I just turned my gigabit back down to 100Mb. Except for a very few sites, NOTHING arrives at a gigabit. I’m already using ad blockers. A web page with 2Mb of data (lots!) loads in a fraction of a second at either 100Mb or 1G. I can’t watch multiple HD videos at once (it would be nice if I could watch some at 2x speed with pitch correction, but then the codec would probably lower the data rate).

I can download an e-version of the local paper. It should take less than 2 seconds at a gigabit, but still takes over 10.

Another problem is latency. Before the firehose can turn on, the connection needs to complete the three-way handshake (or one of its less common variants), which adds a fixed delay. Not everything supports SPDY or a similar extension.
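The fixed cost of round trips also explains why Web pages gain little from a faster link, echoing the latency point in Nick’s reply above. A rough sketch, assuming two round trips of setup (one for the TCP three-way handshake, one for the request) and ignoring DNS, TLS, and TCP slow start; the 50 ms RTT and the reading of “2Mb” as 2 megabytes are assumptions:

```python
def transfer_time_s(size_bytes, bandwidth_bps, rtt_s, setup_rtts=2):
    """Rough fetch time: fixed round trips plus time to push the bytes.

    setup_rtts=2 assumes one RTT for the TCP three-way handshake and one for
    the request/response. DNS, TLS, and TCP slow start are ignored.
    """
    return setup_rtts * rtt_s + size_bytes * 8 / bandwidth_bps


page = 2_000_000  # assumed 2 MB page
rtt = 0.050       # assumed 50 ms round-trip time
for mbps in (100, 1000):
    t = transfer_time_s(page, mbps * 1e6, rtt)
    print(f"{mbps:>5} Mbps: {t:.3f} s")
```

Under these assumptions the page takes about 0.26 s at 100 Mbps and about 0.116 s at 1 Gbps: a tenfold bandwidth increase yields only about a 2.2x speedup, because the 0.1 s spent on round trips does not shrink.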

I have a 4K TV/monitor, but don’t watch UHD; I have Amazon Prime but don’t use it in HD, and it doesn’t consume gigabits.

Even YouTube simply loads a little bit of the video and stops.

There is a mapping database that is updated roughly monthly, but it only allows a half-dozen connections per IP and throttles downloads at its end to about 10Mbit, so although I could theoretically download it in a few minutes, it still takes hours.

BitTorrent downloading the latest linux distro to burn on to a DVD is about the only thing which I found is fast.

I’m an outlier, but I think even with a huge pipe on your end, most of the time you get a trickle because of the far end.

I’m disappointed, but apparently the sources of the content I access can rarely do better than 50Mbit.