How can we design remedies for content “violations” online?

Speaking today at CITP is Eric Goldman (@ericgoldman), a professor of law and co-director of the High Tech Law Institute at Santa Clara University School of Law. Before he became a full-time academic in 2002, Eric practiced Internet law for eight years in Silicon Valley. His research and teaching focus on Internet, IP, and advertising law topics, and he blogs on these topics at the Technology & Marketing Law Blog.

Eric reminds us that content moderation questions are front-page stories every week. Lawmakers and tech companies are wondering how to create a world where everyone can have their say, others have a chance to hear them, and people are protected from harm.

Decisions about content moderation depend on a set of questions, says Eric:

“What rules govern online content?” “Who creates those rules?” “Who adjudicates rule violations?” Eric is most interested in a final question: “What consequences are imposed for rule violations?”

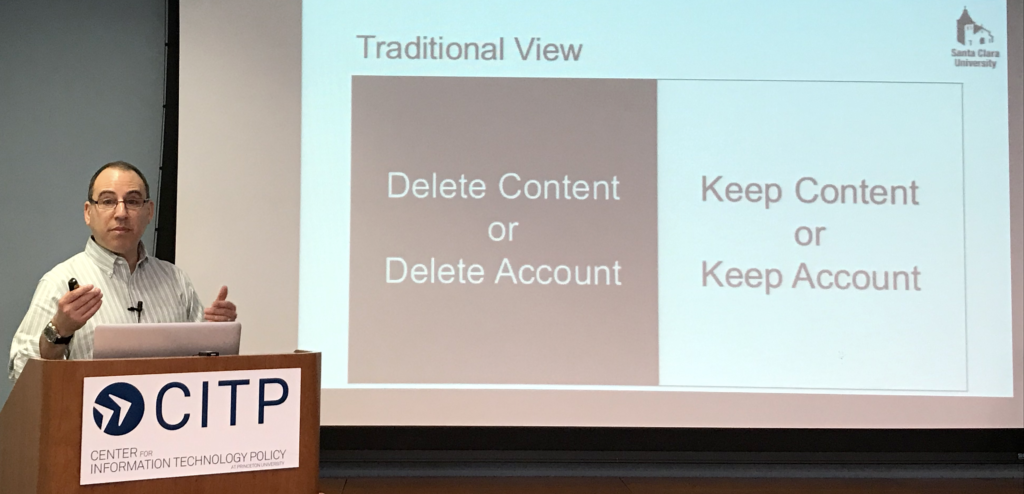

So what should we do once a content violation has been observed? The traditional view offers a binary choice: delete the content or account, or keep both. For example, under the Digital Millennium Copyright Act, platforms are required to “remove or disable access to” copyrighted material. The statute offers no option short of removing the material from view. The DMCA also specifies two other remedies: terminating “repeat infringers” and issuing subpoenas to identify or unmask alleged infringers. Overall, however, the primary intervention is removal, and there is no lesser action.

Next, Eric tells us about civil society principles that likewise treat removal as the primary remedy. For example, the Manila Principles on Intermediary Liability assume that removal is the only available intervention, but require that it be necessary and proportionate and use “the least restrictive technical means.” Similarly, the Santa Clara Principles assume that removal is the only available option.

Eric reminds us that there are many remedies between removing content and keeping it. Why should we pay attention to them? With a wider range of options, we can (a) avoid collateral damage from overbroad remedies and (b) develop a broader remedy toolkit that matches the needs of different communities. A wider palette of options would also require principles for choosing among those remedies. Eric wants to be able to describe the options that regulators and platforms have at their disposal when making policy decisions.

To illustrate the value of being able to differentiate between remedies, Eric talks about communities that have rich sets of rules with a range of consequences other than full approval or removal, such as churches, fraternities, and sports leagues.

Eric then offers us a taxonomy of remedies, drawn from examples in use online: (a) content restrictions, (b) account restrictions, (c) visibility reductions, (d) financial levers, and (e) other.

Eric asks: once we have listed the remedies, how could we possibly choose among them? Eric discusses several existing theories for choosing among remedies, but he doesn't think those models are useful for this conversation. Furthermore, conversations about government-imposed remedies differ from conversations about remedies for internet content violations.

Unlike internet content policies, says Eric, government remedies:

- are determined by elected officials

- are funded by taxes

- are backed by police power to enforce compliance

- include some options available only to the government (like jail or the death penalty)

- are subject to constitutional limits

Finally, Eric shares some early thoughts about how to choose among possible remedies:

- Remedy selection reflects a service’s normative priorities, which differ from service to service

- Possible questions to ask when choosing among remedies:

    - How bad is the rule violation?

    - How confident is the service that the rule was actually violated?

    - How open is the community?

    - How will the remedy affect other community members?

    - How should the service balance behavioral conformance against user engagement?

- Site design can prevent violations

    - For example, services can educate and socialize contributors

- Services with only binary remedies aren’t well positioned to solve problems, and other actors may be in a better position

- Typically, private remedies are better than judicially imposed remedies, but at the cost of due process

- Remedies should be necessary and proportionate

- Remedies should empower users to choose for themselves what to do
