It has been understood for decades that it’s practically impossible to secure your computer (or computer-based device such as a voting machine) from attackers who have physical access. The basic principle is that someone with physical access doesn’t have to log in using the password; they can just unscrew your hard drive (or SSD, or other memory) and read the data, or overwrite it with modified data, modified application software, or a modified operating system. This is an example of an “Evil Maid” attack, in the sense that if you leave your laptop alone in your hotel room while you’re out, the cleaning staff could, in principle, borrow your laptop for half an hour and perform such attacks. Other “Evil Maid” attacks may not require unscrewing anything: the attacker can just plug a device into the USB port, for example.

And indeed, though it may take a lot of skill and time to design the attack, anyone can be trained to carry it out. Here’s how to do it on an unsophisticated 1990s-era voting machine (still in use in New Jersey):

More than twenty years ago, computer companies started implementing protections against these attacks. Full-disk encryption means that the data on the disk isn’t readable without the encryption key. (But that key must be present somewhere in your computer, so that it can access the data!) Trusted platform modules (TPM) encapsulate the encryption key, so attackers (even Evil Maids) can’t get the key. So in principle, the attacker can’t “hack” the computer by installing unauthorized software on the disk. (TPMs can serve other functions as well, such as “attestation of the boot process,” but here I’m focusing on their use in protecting whole-disk encryption keys.)

So it’s worth asking, “how well do these protections work?” If you’re running a sophisticated company and you hire a well-informed and competent CIO to implement best practices, can you equip all your employees with laptops that resist evil-maid attacks? And the answer is: It’s still really hard to secure your computers against determined attackers.

In this article, “From stolen laptop to inside the company network,” the Dolos Group (a cybersecurity penetration-testing firm) documents an assessment they did for an unnamed corporate client. The client asked, “if a laptop is stolen, can someone use it to get into our internal network?” The fact that the client was willing to pay to have this question answered already indicates how serious this client is. And, in fact, Dolos starts their report by listing all the things the client got right: There are many potential entry points that the client’s cybersecurity configuration had successfully shut down.

Indeed, this laptop had full disk encryption (FDE); it had a TPM (trusted platform module) to secure the FDE encryption key; the BIOS was well configured and locked with a BIOS password; attack pathways via NetBIOS Name Service were shut down; and so on. But there was a vulnerability in the way that FDE talked to the TPM over the SPI bus. And if that last sentence doesn’t speak to you, then how about this: They found one chip on the motherboard (labeled CMOS in the picture),

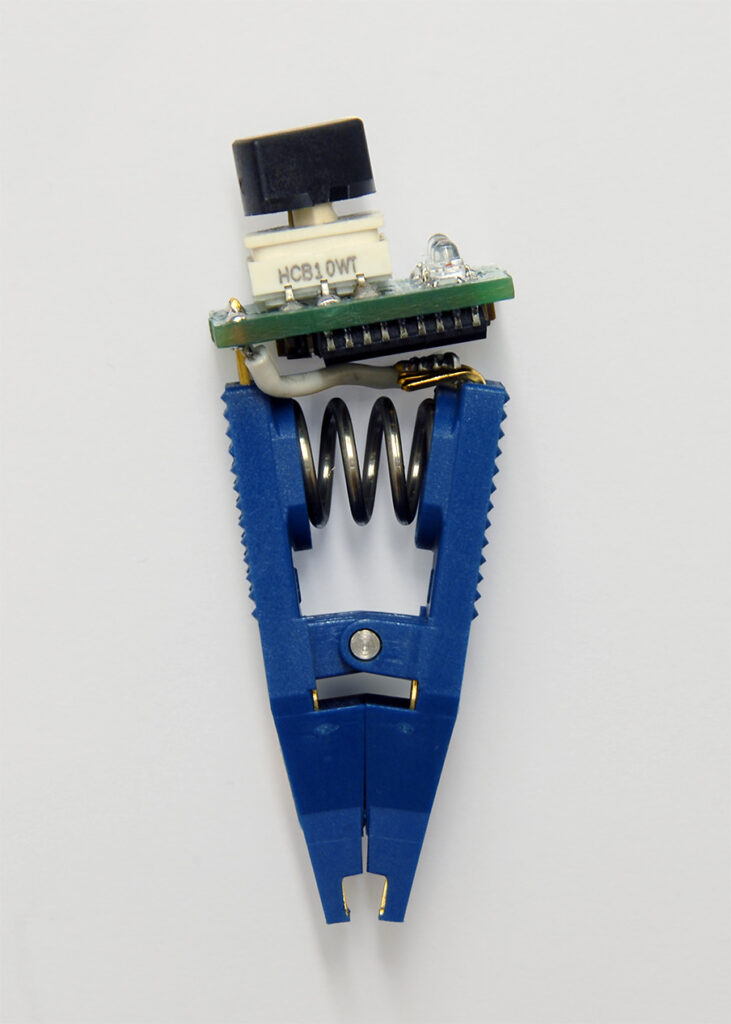

that was listening in on the conversation between the trusted platform module and the full-disk encryption. They built a piece of equipment; they could give their equipment to an Evil Maid who could clip one of these onto the CMOS chip:

and in a few seconds the Evil Maid could learn the secret key; then (in a few minutes) read the entire (decrypted) disk drive, or install a new operating system to run in Virtualized mode. FDE has been made irrelevant, so the TPM is also irrelevant.

Then, the attacker can get into the corporate network. Or (though Dolos doesn’t describe this) the attacker could install spyware or malware on the hard drive, remove the blue clip, screw the cover back on, and return the laptop to the hotel room.

This vulnerability can be patched over; but computer systems are very complex these days; there will almost always be another security slip-up.

And what about voting machines? Are voting machines well protected by TPM and FDE, and can the protections in voting machines be bypassed? For voting machines, the Evil Maid is not a hotel employee; it may be a corrupt election warehouse worker, a corrupt pollworker at 6am, or anyone who has unattended access to the voting machine for half an hour. In many jurisdictions, voting machines are left unattended at polling places before and after elections.

We would like to know, “Is the legitimate vote-counting program installed in the voting machine, or has some hacker replaced it with a cheating program?”

One way the designer/vendor of a voting machine could protect the firmware (operating system and vote-counting program) against hacking is, “store it in a whole-disk-encrypted drive, and lock the key inside the TPM.” This is supposed to work, but the Dolos report shows that in practice there tend to be slip-ups.

As an alternative to FDE+TPM that I’ve described above, there are other ways to (try to) ensure that the right firmware is running; they have names such as “Secure Boot” and “Trusted Boot”, and use hardware such as UEFI and TPM. Again, ideally they are supposed to be secure; in practice they’re a lot more secure than doing nothing; but in the implementation there may be slip-ups.
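To make the idea behind “Trusted Boot” a little more concrete, here is a minimal Python sketch of the hash chain that a TPM’s platform configuration registers (PCRs) implement: each boot stage is hashed, and the register is updated to the hash of its old value followed by that measurement. The stage names and the helper functions `extend` and `measure_boot_chain` are made up for illustration; a real TPM does this in hardware, and this sketch says nothing about how any particular voting machine works.

```python
import hashlib

PCR_SIZE = 32  # SHA-256 digest length in bytes


def extend(pcr: bytes, component: bytes) -> bytes:
    """Return the new register value after measuring one boot component."""
    measurement = hashlib.sha256(component).digest()
    # The register can only be "extended", never set directly:
    # new value = H(old value || measurement).
    return hashlib.sha256(pcr + measurement).digest()


def measure_boot_chain(components: list[bytes]) -> bytes:
    """Fold every boot stage, in order, into a single register value."""
    pcr = bytes(PCR_SIZE)  # in this sketch the register starts at all zeros
    for component in components:
        pcr = extend(pcr, component)
    return pcr


# Hypothetical boot stages; real ones would be firmware and kernel images.
good_chain = [b"bootloader v1", b"kernel v1", b"vote-counting app v1"]
evil_chain = [b"bootloader v1", b"kernel v1", b"vote-stealing app"]

expected = measure_boot_chain(good_chain)
print(measure_boot_chain(good_chain) == expected)  # True
print(measure_boot_chain(evil_chain) == expected)  # False
```

Because the final value depends on every stage and on their order, replacing any one stage changes it. The protection is only as good as the hardware that records and checks the measurements, which is where the slip-ups tend to occur.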

The new VVSG 2.0, the “Voluntary Voting System Guidelines 2.0” in effect February 2021, requires cryptographic boot verification (see section 14.3.1-A): that is, “cryptographically verify firmware and software integrity before the operating system is loaded into memory.” But the VVSG 2.0 doesn’t require anything as secure as (hardware-assisted) “Secure Boot” or “Trusted Boot”. They say, “This requirement does not mandate hardware support for cryptographic verification” and “Verifying the bootloader itself is excluded from this requirement.” That leaves voting machines open to the kind of security gap described in Voting Machine Hashcode Testing: Unsurprisingly insecure, and surprisingly insecure. That wasn’t just a slip-up, it was a really insecure policy and practice.
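A toy Python sketch may help show why leaving the bootloader unverified matters. It assumes a purely software check in which the bootloader compares the operating-system image against a known-good SHA-256 digest; the function names and image contents are hypothetical, and a real implementation would more likely check a digital signature than a bare digest.

```python
import hashlib
import hmac


def digest(image: bytes) -> str:
    """SHA-256 digest of an image, as a hex string."""
    return hashlib.sha256(image).hexdigest()


def honest_bootloader(os_image: bytes, expected_digest: str) -> bool:
    """Agree to boot only if the OS image matches the known-good digest."""
    return hmac.compare_digest(digest(os_image), expected_digest)


def tampered_bootloader(os_image: bytes, expected_digest: str) -> bool:
    """An attacker's replacement bootloader: it simply skips the check."""
    return True


# Hypothetical images standing in for real firmware.
LEGIT_OS = b"operating system + legitimate vote-counting program"
HACKED_OS = b"operating system + cheating program"
EXPECTED = digest(LEGIT_OS)

print(honest_bootloader(LEGIT_OS, EXPECTED))     # True: boots
print(honest_bootloader(HACKED_OS, EXPECTED))    # False: refuses to boot
print(tampered_bootloader(HACKED_OS, EXPECTED))  # True: boots the hacked OS
```

The check is only meaningful if nothing can replace the code that performs it. If an attacker with physical access can overwrite the bootloader, and nothing verifies the bootloader, the verification of the operating system can be bypassed entirely.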

And by the way, no voting machines have yet been certified to VVSG 2.0, and there’s not even a testing lab that’s yet accredited to test voting machines to the 2.0 standard. Existing voting machines are certified to a much weaker VVSG 1.0 or 1.1 that doesn’t even consider these issues.

Even the most careful and sophisticated Chief Information Officers using state-of-the-art practices find it extremely difficult to secure their computers against Evil Maid attacks. And there has never been evidence that voting-machine manufacturers are among the most careful and sophisticated cyberdefense practitioners. Most voting machines are made to old standards that have zero protection against Evil Maid attacks; the new standards require cryptographic boot verification, but in a weaker form than hardware-assisted Secure Boot; and no voting machines are even qualified to those new standards.

Here’s an actual voting-machine hacking device made by scientists studying India’s voting machines. Just turn the knob on top to program which candidate you want to win:

Wholesale attacks on Election Management computers

And really, the biggest danger is not a “retail” attack on one machine by an Evil Maid; it’s a “wholesale” attack that penetrates a corporate network (of a voting-machine manufacturer) or a government network (of a state or county running an election) and “hacks” thousands of voting machines all at once. The Dolos report reminds us again why it’s a bad idea for voting machines to “phone home” on a cell-phone network to connect themselves to the internet (or to a corporate or county network): it’s not only that this exposes the voting machine to hackers anywhere on the internet, it also allows the voting machine (hacked by an Evil Maid attack) to attack the county network it phones up.

Even more of a threat is that an attacker with physical access to an Election Management System (that is, a state or county computer used to manage elections) can spread malware to all the voting machines that are programmed by the EMS. How hard is it to hack into an EMS? Just like the PC that Dolos hacked into, an EMS is just a laptop computer; but the county or state that owns it may not be as security-expert as Dolos’s client is. Likely enough, the EMS is not hard to hack into, with physical access.

Conclusion: Don’t let your security depend entirely on “an attacker with physical access still can’t hack me.”

So you can’t be sure what vote-counting (or vote-stealing) software is running in your voting machine. But we knew that already. Our protection, for accurate vote counts, is to vote on hand-marked paper ballots, counted by optical-scan voting machines. If those optical-scan voting machines are hacked, by an Evil Maid, by a corrupt election worker, or by anyone else who gains access for half an hour, then we can still be protected by the consistent use of Risk-Limiting Audits (RLAs) to detect when the computers claim results different from what’s actually marked on the ballots; and by recounting those paper ballots by hand, to correct the results. More states should consistently use RLAs.
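For readers who want a feel for how an RLA works, here is a minimal Python sketch of one well-known style, a ballot-polling audit using the BRAVO sequential test, simplified to a two-candidate contest with no invalid ballots. The function name, the ballot encoding ("W" for the reported winner, "L" for the reported loser), the fixed seed, and the `max_draws` cutoff are all assumptions made for illustration; real audits draw their random seed in public and handle many more cases.

```python
import random


def ballot_polling_audit(ballots, reported_winner_share, risk_limit=0.05,
                         max_draws=5000, seed=2021):
    """Simplified two-candidate BRAVO-style ballot-polling audit.

    ballots: what is actually marked on paper, "W" or "L" per ballot.
    reported_winner_share: the winner's vote share claimed by the machines.
    Returns (confirmed, draws). If confirmed is False, the audit should
    escalate to a full hand count of the paper ballots.
    """
    if not 0.5 < reported_winner_share < 1.0:
        raise ValueError("reported winner must have more than half the votes")
    rng = random.Random(seed)
    threshold = 1.0 / risk_limit
    ratio = 1.0  # likelihood ratio: reported result vs. a tie
    for draws in range(1, max_draws + 1):
        ballot = rng.choice(ballots)  # sample with replacement
        if ballot == "W":
            ratio *= reported_winner_share / 0.5
        else:
            ratio *= (1.0 - reported_winner_share) / 0.5
        if ratio >= threshold:
            return True, draws  # strong evidence the reported winner really won
    return False, max_draws


# Machines report 60% for W in both cases; only the paper differs.
honest_paper = ["W"] * 6000 + ["L"] * 4000
hacked_paper = ["W"] * 4000 + ["L"] * 6000

print(ballot_polling_audit(honest_paper, 0.60))
print(ballot_polling_audit(hacked_paper, 0.60))
```

When the paper agrees with the reported outcome, the audit typically stops after examining a small sample of ballots. When the reported winner actually lost on paper, the chance that the audit wrongly confirms the result is at most the risk limit, and the failure to confirm triggers the hand recount that corrects the outcome.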

The use of hand-marked paper ballots with routine RLAs can protect us from wholesale attacks. But it would be better to have, in addition, proper cybersecurity hygiene in election management computers and voting machines.

I thank Ars Technica for bringing the Dolos report to my attention. Their article concludes with many suggestions made by security experts for shutting down the particular vulnerability that Dolos found. But remember, even though you can (with expertise) shut down some particular loophole, you can’t know how many more are out there.

I wish we were at the point where an evil maid would need TPM sniffing. The majority of election systems in the field still do not use the TPM or BitLocker. When systems offer it, it is optional and requires extra time and effort to set up. And I’m not aware of any system that uses the TPM for election-specific keys.

Your criticism of the VVSG 2.0 requirement on cryptographic boot verification is fair, but the topic was discussed at some length during development.

> 14.3.1-A – Cryptographic boot verification
>
> The voting system must cryptographically verify system integrity before the operating system is loaded into memory.
>
> Discussion:
>
> This requirement does not mandate hardware support. This requirement could be met by trusted boot, but other software-based solutions exist. This includes a software bootloader cryptographically verifying the OS prior to execution. Verifying the bootloader itself is excluded from this requirement, but not prohibited.

The idea was to raise the minimum requirements significantly without being overly prescriptive. The requirement must be met, but vendors get flexibility in how they meet it. The task of the cybersecurity working group wasn’t to weigh the risks around particular implementations. And we did not want to rule out future innovations (in case VVSG 2.0 is still used 15 years from now).

The VVSG 2.0 test assertion for 14.3.1-A will be significant in determining what an actual implementation must include. The current test assertions do not include any test for it, but my understanding is that more are being added. Unfortunately, the cybersecurity working group is not part of that process.