Machine learning is eating the world. The abundance of training data has helped ML achieve amazing results for object recognition, natural language processing, predictive analytics, and all manner of other tasks. Much of this training data is very sensitive, including personal photos, search queries, location traces, and health-care records.

In a recent series of papers, we uncovered multiple privacy and integrity problems in today’s ML pipelines, especially (1) online services such as Amazon ML and Google Prediction API that create ML models on demand for non-expert users, and (2) federated learning, aka collaborative learning, that lets multiple users create a joint ML model while keeping their data private (imagine millions of smartphones jointly training a predictive keyboard on users’ typed messages).

Our Oakland 2017 paper, which has just received the PET Award for Outstanding Research in Privacy Enhancing Technologies, concretely shows how to perform membership inference, i.e., determine if a certain data record was used to train an ML model. Membership inference has a long history in privacy research, especially in genetic privacy and generally whenever statistics about individuals are released. It also has beneficial applications, such as detecting inappropriate uses of personal data.

We focus on classifiers, a popular type of ML models. Apps and online services use classifier models to recognize which objects appear in images, categorize consumers based on their purchase patterns, and other similar tasks. We show that if a classifier is open to public access – via an online API or indirectly via an app or service that uses it internally – an adversary can query it and tell from its output if a certain record was used during training. For example, if a classifier based on a patient study is used for predictive health care, membership inference can leak whether or not a certain patient participated in the study. If a (different) classifier categorizes mobile users based on their movement patterns, membership inference can leak which locations were visited by a certain user.

There are several technical reasons why ML models are vulnerable to membership inference, including “overfitting” and “memorization” of the training data, but they are a symptom of a bigger problem. Modern ML models, especially deep neural networks, are massive computation and storage systems with millions of high-precision floating-point parameters. They are typically evaluated solely by their test accuracy, i.e., how well they classify the data that they did not train on. Yet they can achieve high test accuracy without using all of their capacity. In addition to asking if a model has learned its task well, we should ask what else has the model learned? What does this “unintended learning” mean for the privacy and integrity of ML models?

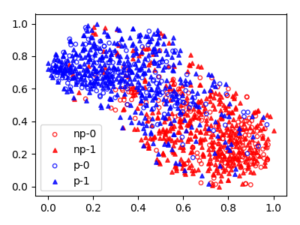

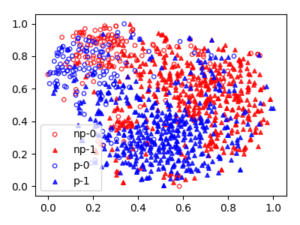

Deep networks can learn features that are unrelated – even statistically uncorrelated! – to their assigned task. For example, here are the features learned by a binary gender classifier trained on the “Labeled Faces in the Wild” dataset.

While the upper layer of this neural network has learned to separate inputs by gender (circles and triangles), the lower layers have also learned to recognize race (red and blue), a property uncorrelated with the task.

Our more recent work on property inference attacks shows that even simple binary classifiers trained for generic tasks – for example, determining if a review is positive or negative or if a face is male or female – internally discover fine-grained features that are much more sensitive. This is especially important in collaborative and federated learning, where the internal parameters of each participant’s model are revealed during training, along with periodic updates to these parameters based on the training data.

We show that a malicious participant in collaborative training can tell if a certain person appears in another participant’s photos, who has written the reviews used by other participants for training, which types of doctors are being reviewed, and other sensitive information. Notably, this leakage of “extra” information about the training data has no visible effect on the model’s test accuracy.

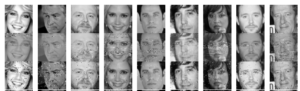

A clever adversary who has access to the ML training software can exploit the unused capacity of ML models for nefarious purposes. In our CCS 2017 paper, we show that a simple modification to the data pre-processing, without changing the training procedure at all, can cause the model to memorize its training data and leak it in response to queries. Consider a binary gender classifier trained in this way. By submitting special inputs to this classifier and observing whether they are classified as male or female, the adversary can reconstruct the actual images on which the classifier was trained (the top row is the ground truth):

Federated learning, where models are crowd-sourced from hundreds or even millions of users, is an even juicier target. In a recent paper, we show that a single malicious participant in federated learning can completely replace the joint model with another one that has the same accuracy but also incorporates backdoor functionality. For example, it can intentionally misclassify images with certain features or suggest adversary-chosen words to complete certain sentences.

When training ML models, it is not enough to ask if the model has learned its task well. Creators of ML models must ask what else their models have learned. Are they memorizing and leaking their training data? Are they discovering privacy-violating features that have nothing to do with their learning tasks? Are they hiding backdoor functionality? We need least-privilege ML models that learn only what they need for their task – and nothing more.

This post is based on joint research with Eugene Bagdasaryan, Luca Melis, Reza Shokri, Congzheng Song, Emiliano de Cristofaro, Deborah Estrin, Yiqing Hua, Thomas Ristenpart, Marco Stronati, and Andreas Veit.

Thanks to Arvind Narayanan for feedback on a draft of this post.